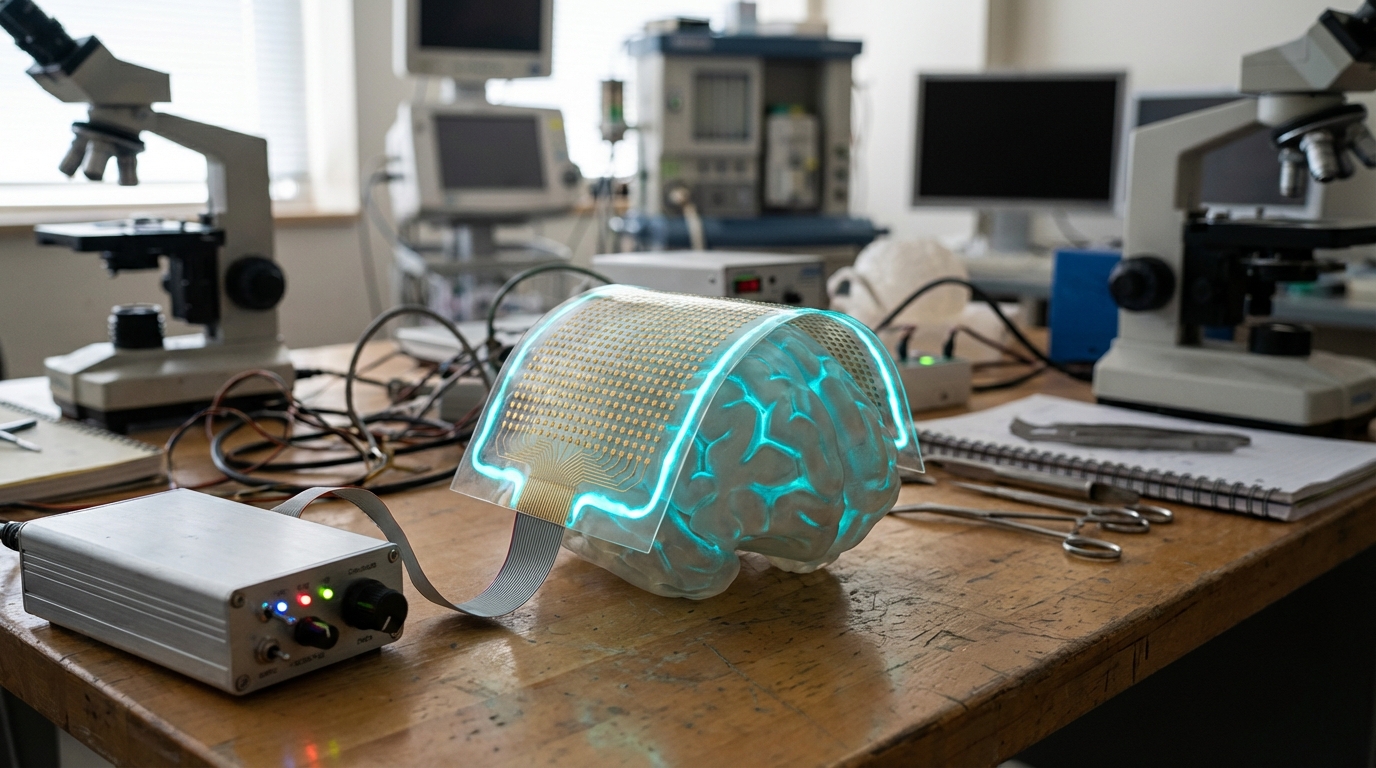

Neural Foundation Models

Neural foundation models are large-scale AI models, similar in concept to GPT for language but trained on neural signals. They are pre-trained on large, diverse datasets of brain recordings from both intracranial (implanted) and non-invasive (EEG, MEG) sources, and learn general patterns of neural activity that carry over across individuals and tasks. Sometimes described as 'NeuroGPT'-style models, they enable few-shot decoding for new users, who need to supply only minimal calibration data, and transfer learning across brain-computer interface (BCI) tasks. Because they reuse knowledge learned from many previous users and tasks, they can substantially reduce the time and data needed to set up a BCI for a new user or a new application.

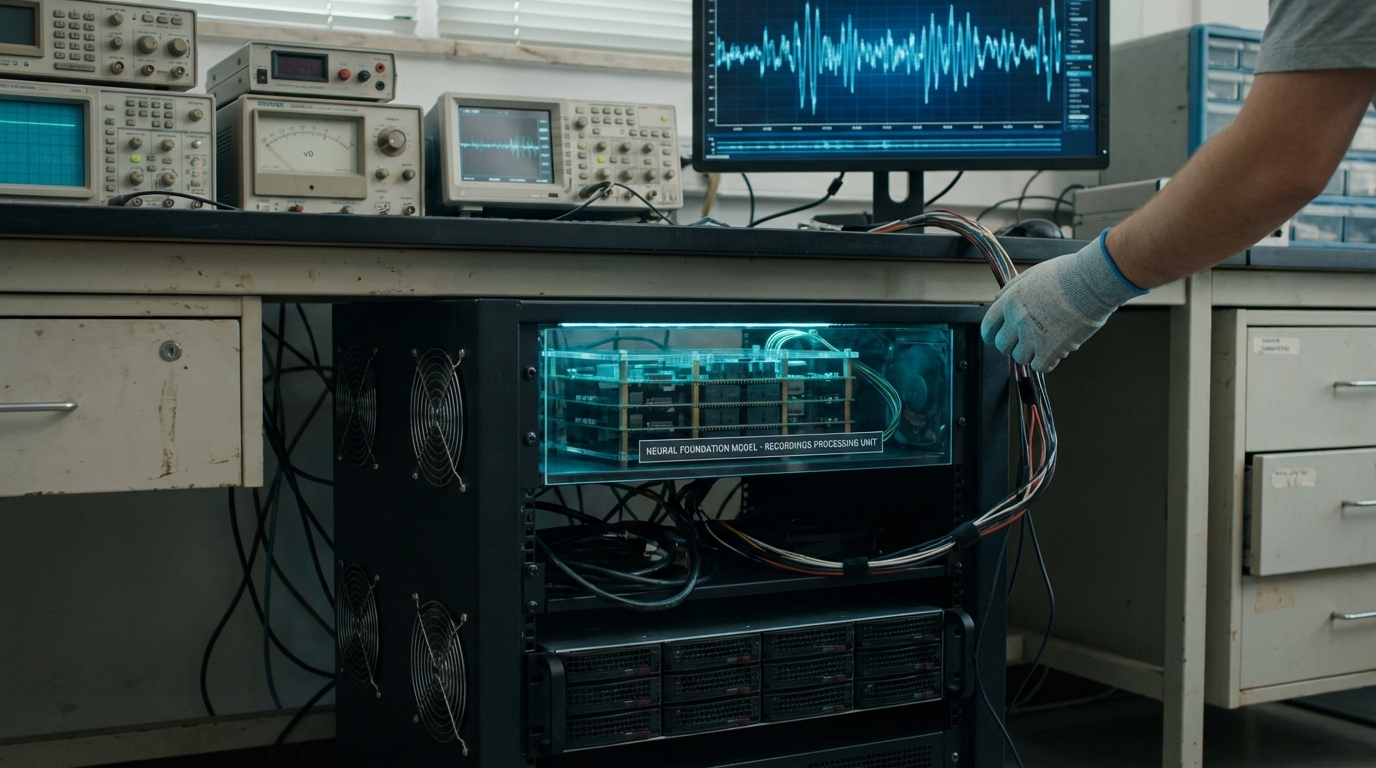

This approach addresses a major limitation of current BCIs: each user must provide extensive calibration and training data, which makes setup time-consuming and limits usability. A model pre-trained on large datasets can instead generalize across users and tasks, so a new user only needs to supply a small amount of data to adapt it. Both research institutions and companies are developing such models.
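As a rough illustration of few-shot decoding on top of a pre-trained model, the sketch below keeps an encoder frozen and fits only a small linear readout on ten calibration trials from a new user. Everything in it is an assumption made for illustration: the data are synthetic, `frozen_encoder` is a fixed random projection of per-channel log-variance standing in for a real pre-trained network, and the names `fit_linear_head` and `predict` are hypothetical rather than part of any existing library.

```python
import numpy as np

rng = np.random.default_rng(0)
N_CHANNELS, N_SAMPLES, EMB_DIM, N_CLASSES = 8, 128, 8, 2

# Hypothetical stand-in for pre-trained weights; a real model would load these.
ENCODER_WEIGHTS = rng.standard_normal((N_CHANNELS, EMB_DIM)) / np.sqrt(N_CHANNELS)

def frozen_encoder(trials):
    """Map trials of shape (n_trials, n_channels, n_samples) to embeddings.

    Placeholder for a pre-trained encoder: per-channel log-variance
    followed by a fixed projection that is never updated.
    """
    feats = np.log(trials.var(axis=2) + 1e-8)
    return feats @ ENCODER_WEIGHTS

def fit_linear_head(emb, labels, n_classes, ridge=1.0):
    """Fit a ridge-regularised linear readout on a few calibration trials."""
    onehot = np.eye(n_classes)[labels]
    X = np.hstack([emb, np.ones((len(emb), 1))])  # append a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ onehot)

def predict(emb, head):
    X = np.hstack([emb, np.ones((len(emb), 1))])
    return (X @ head).argmax(axis=1)

# Synthetic "new user": each class raises the signal power of one channel.
n_trials = 210
labels = np.tile([0, 1], n_trials // 2)
trials = rng.standard_normal((n_trials, N_CHANNELS, N_SAMPLES))
trials[labels == 0, 0] *= 3.0
trials[labels == 1, 1] *= 3.0

# Few-shot calibration: only the first 10 trials (5 per class) are labelled.
n_calib = 10
head = fit_linear_head(frozen_encoder(trials[:n_calib]), labels[:n_calib], N_CLASSES)

# Evaluate on the remaining trials, which the readout has never seen.
preds = predict(frozen_encoder(trials[n_calib:]), head)
accuracy = float((preds == labels[n_calib:]).mean())
print(f"held-out accuracy with {n_calib} calibration trials: {accuracy:.2f}")
```

Only the readout is trained here, which is why so few labelled trials are needed; whether a real pre-trained encoder yields embeddings this separable for a new user is exactly the generalization question the models have to answer.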

The technology matters most for making BCIs practical and accessible, since shorter calibration directly improves usability, and as it matures it could enable plug-and-play BCIs. Several challenges remain, however: ensuring generalization across individuals, managing the privacy of neural data, and achieving reliable performance. Neural foundation models represent an important evolution in BCI software, but widespread use depends on demonstrating that they generalize reliably across the diversity of human brains.