Brain-State Decoders

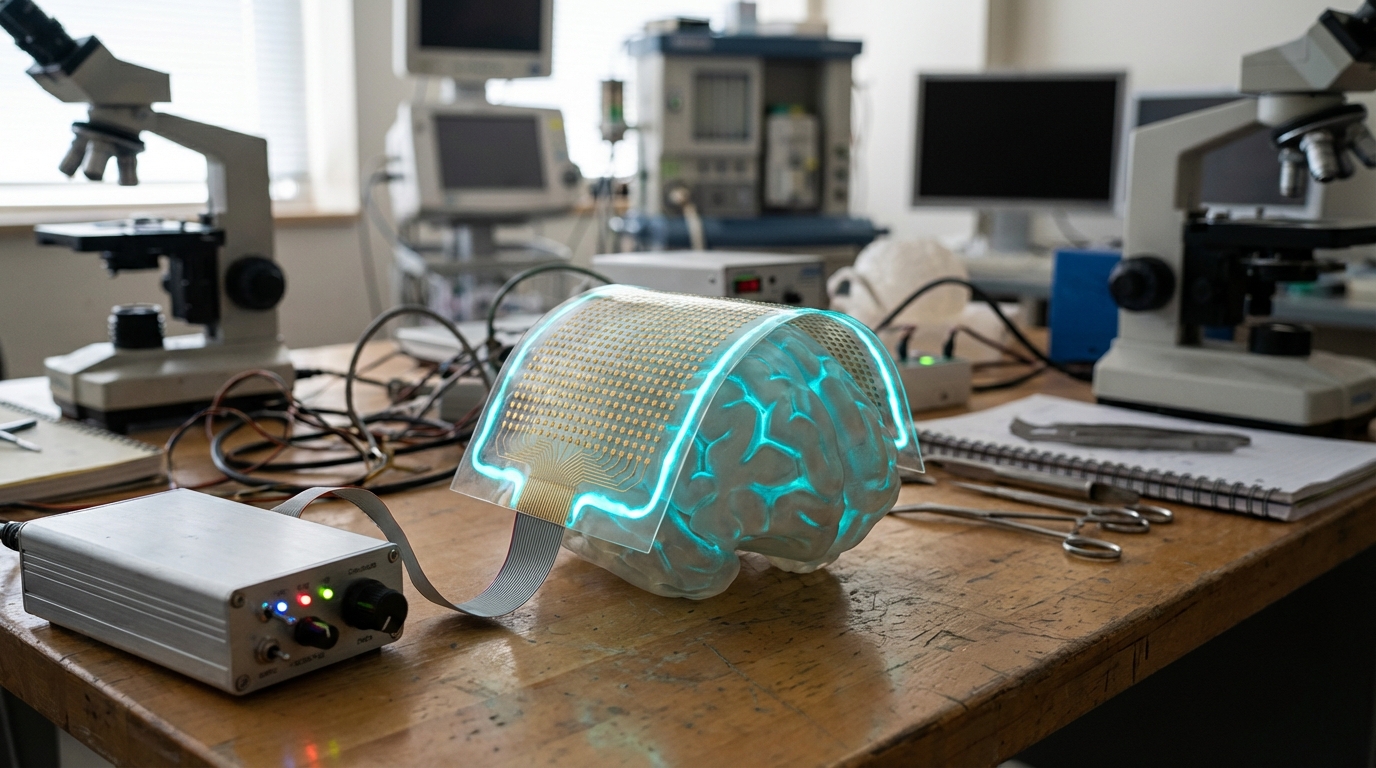

Brain-state decoders are machine learning models that fuse multimodal neural and physiological data, including EEG (electroencephalography), fNIRS (functional near-infrared spectroscopy), and peripheral physiological signals (heart rate, skin conductance), to classify cognitive states such as attention, vigilance, fatigue, stress, or engagement in real time. The decoded state lets adaptive interfaces, for example cockpit displays, personalized learning systems, or assistive technologies, respond to the user dynamically: adjusting task difficulty, raising an alert when attention wanes, or simplifying the interface when stress is detected. The result is more responsive and effective human-computer interaction.
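The fuse-then-classify pipeline described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes feature-level (early) fusion, where each modality is reduced to a few per-window features that are concatenated into one vector, and it uses a simple nearest-centroid classifier over z-scored features as a stand-in for whatever model a real decoder would use. The helper names (`fuse`, `NearestCentroidDecoder`), the features, the state labels, and all numbers are hypothetical.

```python
import math
from statistics import mean, pstdev


def fuse(eeg, fnirs, physio):
    """Feature-level (early) fusion: concatenate per-modality feature lists."""
    return list(eeg) + list(fnirs) + list(physio)


class NearestCentroidDecoder:
    """Hypothetical decoder: z-score each fused feature, then return the
    label of the closest class centroid (Euclidean distance)."""

    def fit(self, X, y):
        columns = list(zip(*X))
        self.means = [mean(c) for c in columns]
        # Guard against zero variance so constant features do not divide by zero.
        self.stds = [pstdev(c) or 1.0 for c in columns]
        groups = {}
        for x, label in zip(X, y):
            groups.setdefault(label, []).append(self._scale(x))
        self.centroids = {
            label: [mean(col) for col in zip(*rows)] for label, rows in groups.items()
        }
        return self

    def _scale(self, x):
        return [(v - m) / s for v, m, s in zip(x, self.means, self.stds)]

    def predict(self, x):
        z = self._scale(x)
        return min(self.centroids, key=lambda label: math.dist(z, self.centroids[label]))


# Made-up calibration windows; values are illustrative, not physiological norms.
# Each window: EEG band-power ratio | fNIRS HbO change | heart rate (bpm), skin conductance (uS)
windows = [
    (fuse([0.8], [0.30], [72, 2.1]), "engaged"),
    (fuse([0.9], [0.25], [75, 2.4]), "engaged"),
    (fuse([1.6], [0.05], [64, 1.2]), "fatigued"),
    (fuse([1.8], [0.02], [62, 1.0]), "fatigued"),
]
X, y = zip(*windows)
decoder = NearestCentroidDecoder().fit(X, y)

# Decode a new window as it arrives.
print(decoder.predict(fuse([1.7], [0.04], [63, 1.1])))  # fatigued
```

Concatenation is the simplest fusion strategy. An alternative is late fusion, where a separate model is trained per modality and their outputs are combined, which trades simplicity for robustness when one sensor is missing or noisy.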

This innovation addresses the need for systems that adapt to the user's cognitive state; interfaces that ignore mental state may be ineffective or even dangerous. By detecting cognitive states, such systems can tailor the support they provide. Both research institutions and companies are developing these technologies.

The technology is particularly valuable in safety-critical applications such as aviation and in personalized learning, where adapting to cognitive state could improve outcomes, and further improvements could open new applications in human-computer interaction. Several challenges remain, however: ensuring accuracy, managing the privacy of users' neural and physiological data, and demonstrating value. Brain-state decoders are an important direction for adaptive interfaces, but they need continued development to reach the reliability required for practical use and must still prove their accuracy and value in real-world applications.
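Reliability matters as much as raw accuracy when a decoder drives an interface: a single misclassified window should not trigger an alert. One common mitigation, sketched here under assumptions, is to act only when a state is decoded confidently in most of the last few windows. The class name, state labels, thresholds, and action strings below are hypothetical, and the confidence value is assumed to come from the classifier (for example, a predicted class probability).

```python
from collections import Counter, deque

UNCERTAIN = "uncertain"

# Hypothetical mapping from a persistent decoded state to an interface action.
ACTIONS = {
    "low_attention": "raise attention alert",
    "high_stress": "simplify display",
}


class SmoothedAdapter:
    """Report a state only when it is confidently decoded in at least
    `min_votes` of the last `window` decoding windows."""

    def __init__(self, window=5, min_votes=4, min_confidence=0.7):
        # Choosing min_votes > window / 2 guarantees at most one state can qualify.
        self.history = deque(maxlen=window)
        self.min_votes = min_votes
        self.min_confidence = min_confidence

    def update(self, label, confidence):
        """Record one decode; return the persistent state, or None if there is none."""
        # Low-confidence decodes are stored as UNCERTAIN and never count as votes.
        self.history.append(label if confidence >= self.min_confidence else UNCERTAIN)
        votes = Counter(s for s in self.history if s != UNCERTAIN)
        if votes:
            state, count = votes.most_common(1)[0]
            if count >= self.min_votes:
                return state
        return None


adapter = SmoothedAdapter(window=5, min_votes=4, min_confidence=0.7)
# Made-up stream of (decoded label, classifier confidence) pairs.
stream = [
    ("low_attention", 0.90),
    ("engaged", 0.80),
    ("low_attention", 0.85),
    ("low_attention", 0.95),
    ("low_attention", 0.75),
]
for label, confidence in stream:
    state = adapter.update(label, confidence)
    if state is not None:
        # Fires only on the fifth window, once four of five decodes agree.
        print(ACTIONS.get(state, "no action"))
```

Larger windows and stricter vote thresholds suppress more false alarms at the cost of reacting later; where to set that trade-off depends on the application.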