In-Memory Computing Chips

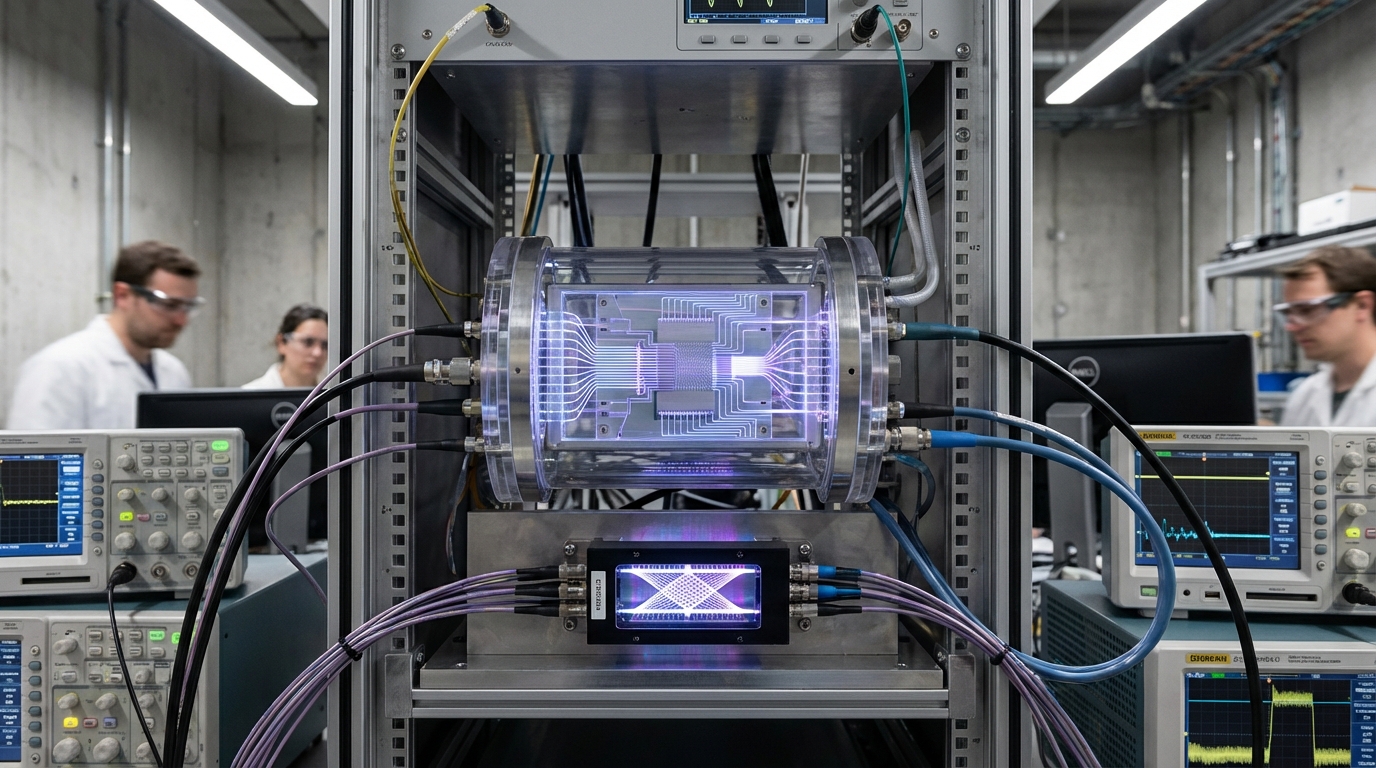

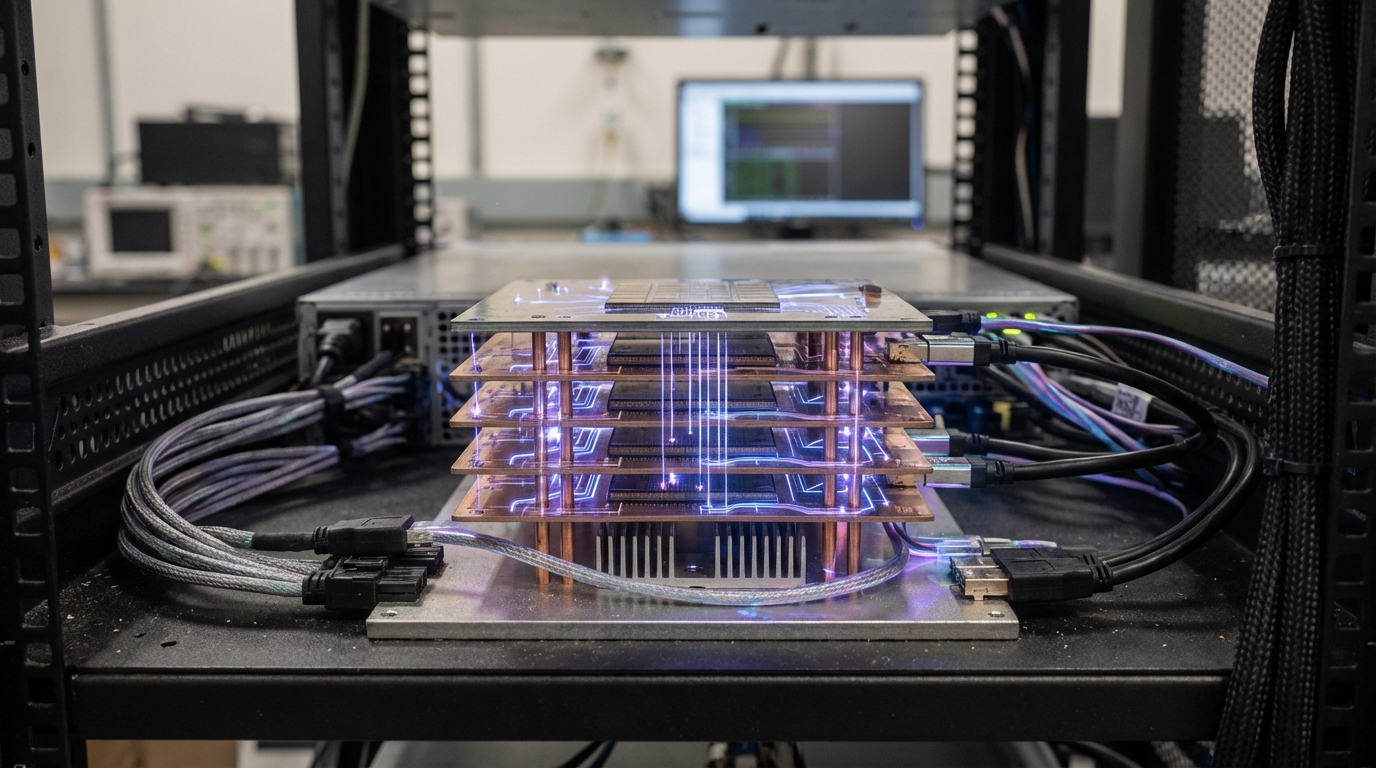

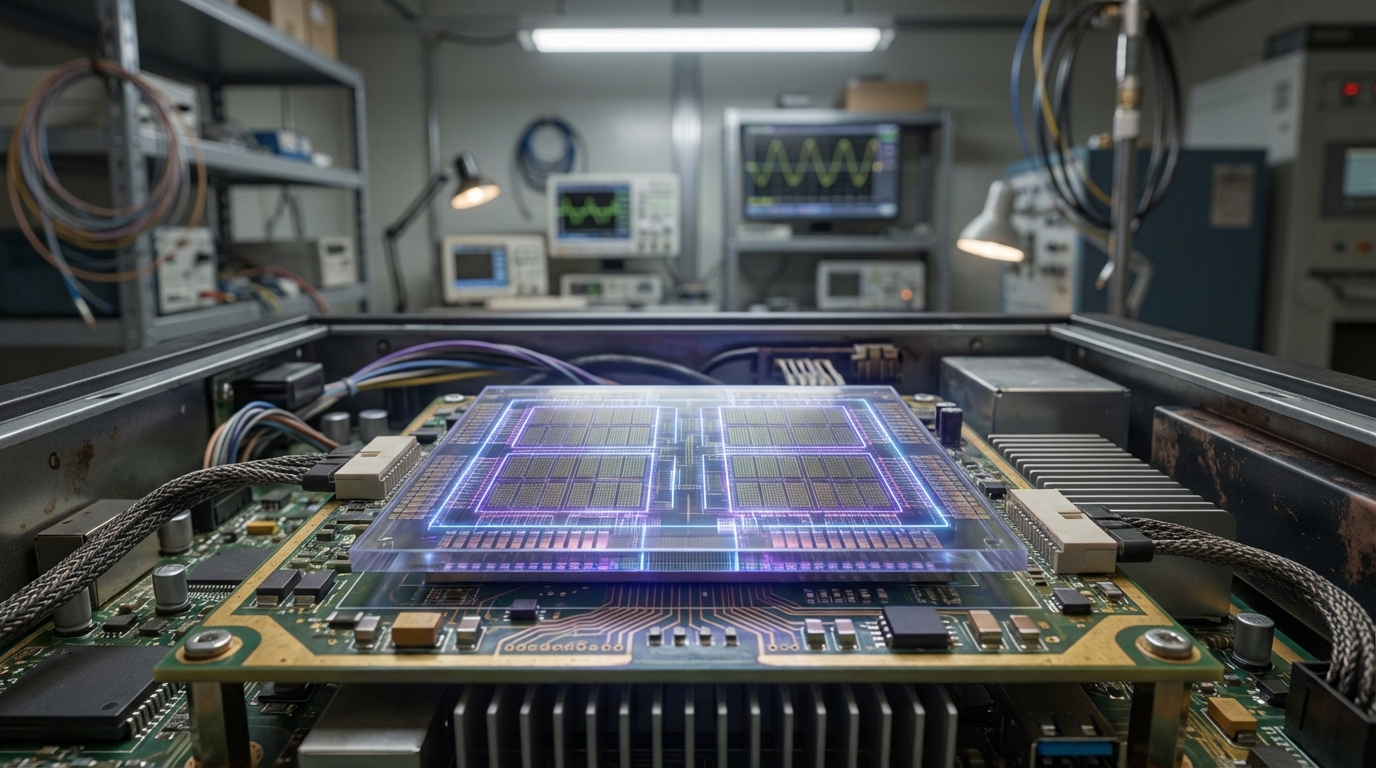

In-memory computing chips integrate processing logic directly within memory arrays, performing calculations where data is stored rather than moving it to a separate processor. They are built on memory technologies such as SRAM, resistive RAM (ReRAM), and phase-change memory (PCM), and they execute operations in place, most commonly the matrix-vector multiplications at the heart of neural networks. Avoiding the round trip between memory and processor can substantially reduce energy consumption and latency compared to traditional von Neumann architectures.
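One common analog realization of this idea is a resistive crossbar. Each weight is stored as a cell conductance G, inputs are applied as row voltages V, each cell passes a current G·V (Ohm's law), and each column wire sums those currents (Kirchhoff's current law), so a whole matrix-vector product is produced in one parallel read. The Python below is a minimal, idealized sketch of that principle under stated assumptions: signed weights are split across a hypothetical pair of conductances per cell, inputs are used directly as voltages, and devices are perfectly linear and noise-free. `G_MAX` and all function names are illustrative, not taken from any vendor or library.

```python
"""Idealized model of an analog in-memory matrix-vector multiply (MVM).

Simplifying assumptions (illustrative, not any specific chip's design):
- each weight is stored as a pair of device conductances (G_pos, G_neg)
  so that signed values can be represented;
- inputs are applied directly as row voltages;
- each cell contributes I = G * V (Ohm's law) and each column wire
  sums its cell currents (Kirchhoff's current law).
"""

G_MAX = 1e-4  # hypothetical maximum device conductance: 100 microsiemens


def weights_to_conductances(weights, g_max=G_MAX):
    """Map a signed weight matrix (rows x cols) to two non-negative conductance matrices."""
    w_abs_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_abs_max
    g_pos = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_pos, g_neg, scale


def crossbar_mvm(g_pos, g_neg, voltages):
    """Column currents: I_j = sum_i (G_pos[i][j] - G_neg[i][j]) * V_i."""
    n_rows, n_cols = len(g_pos), len(g_pos[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):          # in hardware all rows are driven at once;
        for j in range(n_cols):      # these loops only emulate the parallel sum
            currents[j] += (g_pos[i][j] - g_neg[i][j]) * voltages[i]
    return currents


def in_memory_matvec(weights, x):
    """Compute y = W^T x with W stored in the array and x applied as voltages."""
    g_pos, g_neg, scale = weights_to_conductances(weights)
    currents = crossbar_mvm(g_pos, g_neg, x)
    return [i / scale for i in currents]  # undo the weight-to-conductance scaling


if __name__ == "__main__":
    W = [[1.0, -2.0],
         [0.5, 3.0],
         [-1.0, 0.0]]  # 3 inputs (rows) x 2 outputs (columns)
    x = [0.2, 0.1, 0.4]
    print(in_memory_matvec(W, x))
```

The differential pair (G_pos, G_neg) is one way to represent negative weights with devices whose conductance can only be positive; other schemes exist, and real arrays add digital-to-analog and analog-to-digital conversion at the edges.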

This approach targets a fundamental inefficiency of traditional computer architectures, often called the von Neumann bottleneck or memory wall: moving data between processor and memory frequently costs more energy and time than the computation itself. By computing where the data resides, in-memory chips have been reported to improve energy efficiency by orders of magnitude on AI workloads dominated by matrix multiplication, a category that includes the transformer models used in language processing and other applications. Companies such as Mythic and Syntiant, along with many research institutions, are developing these technologies, and some chips are already deployed in edge devices.
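The data-movement argument can be made concrete with simple arithmetic. The sketch below counts only bytes moved for one matrix-vector multiply, not energy, and rests on hypothetical assumptions: an `n_in x n_out` weight matrix too large for on-chip cache, so a von Neumann design fetches every weight once per inference, while an in-memory design keeps the weights resident in the array and moves only input and output activations. Function names and the layer size are illustrative.

```python
"""Back-of-the-envelope count of memory traffic for one matrix-vector multiply.

Illustrative assumptions (hypothetical, not measurements of any real chip):
- one input vector is processed by an n_in x n_out weight matrix;
- von Neumann: weights do not fit in cache, so each is fetched once per inference;
- in-memory: weights stay in the array; only activations move;
- every value occupies `bytes_per_value` bytes.
"""


def von_neumann_bytes(n_in, n_out, bytes_per_value=1):
    # weights fetched + inputs read + outputs written
    return (n_in * n_out + n_in + n_out) * bytes_per_value


def in_memory_bytes(n_in, n_out, bytes_per_value=1):
    # weights never leave the array; only activations cross the boundary
    return (n_in + n_out) * bytes_per_value


if __name__ == "__main__":
    n_in, n_out = 1024, 1024  # hypothetical layer size
    vn = von_neumann_bytes(n_in, n_out)
    imc = in_memory_bytes(n_in, n_out)
    print(f"von Neumann: {vn} bytes, in-memory: {imc} bytes, ratio ~{vn / imc:.0f}x")
```

Under these assumptions the ratio for a square n x n layer is roughly n/2 (about 513x for 1024 x 1024). The model ignores caching, batching (which amortizes each weight fetch over many inputs), and the cost of reloading weights when a model is too large to stay resident in the array, all of which narrow the gap in practice.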

The technology is particularly valuable for edge AI applications where power efficiency is critical, such as autonomous vehicles, robots, and satellites that must operate on limited power budgets. As transformer models become the foundation of modern AI, in-memory computing offers a pathway to deploying them in resource-constrained environments. However, the technology faces several challenges: limited numerical precision (analog results must be digitized by analog-to-digital converters, whose resolution is constrained by area and power), device-to-device manufacturing variability and drift that perturb the stored values, and the need for algorithms and training methods adapted to these imprecise, noisy computations.
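The precision and variability challenges can be illustrated with a toy simulation. The sketch below is an assumption-laden model, not a characterization of any real device: each weight is perturbed by multiplicative Gaussian noise with a hypothetical relative standard deviation `sigma` (one draw per weight per call, i.e. one simulated programming of the array), and each column output passes through a uniform ADC with `adc_bits` of resolution over a fixed, hypothetical full-scale range. It reports the relative L2 error against the exact product. All names and parameter values are illustrative.

```python
"""Toy model of two analog non-idealities: device variability and ADC precision.

Illustrative assumptions (hypothetical parameters, not data from any real device):
- each programmed weight deviates from its target by multiplicative Gaussian
  noise with relative standard deviation `sigma`;
- each column output is digitized by an ADC with `adc_bits` of resolution
  over a fixed range [-full_scale, +full_scale].
"""
import random


def quantize(value, adc_bits, full_scale):
    """Uniform mid-tread quantizer with clipping, standing in for a column ADC."""
    levels = 2 ** (adc_bits - 1) - 1          # symmetric signed range
    step = full_scale / levels
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / step) * step


def noisy_matvec(weights, x, sigma, adc_bits, full_scale, rng):
    """y_j = ADC(sum_i W_ij * (1 + eps_ij) * x_i), with eps_ij ~ N(0, sigma^2)."""
    out = []
    for j in range(len(weights[0])):
        acc = sum(row[j] * (1.0 + rng.gauss(0.0, sigma)) * xi
                  for row, xi in zip(weights, x))
        out.append(quantize(acc, adc_bits, full_scale))
    return out


def relative_error(weights, x, sigma, adc_bits, full_scale, seed=0):
    """Relative L2 error of the noisy, quantized result versus the exact product."""
    rng = random.Random(seed)
    exact = [sum(row[j] * xi for row, xi in zip(weights, x))
             for j in range(len(weights[0]))]
    approx = noisy_matvec(weights, x, sigma, adc_bits, full_scale, rng)
    num = sum((a - e) ** 2 for a, e in zip(approx, exact)) ** 0.5
    den = sum(e ** 2 for e in exact) ** 0.5 or 1.0
    return num / den


if __name__ == "__main__":
    rng = random.Random(42)
    n = 64
    W = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    x = [rng.uniform(0, 1) for _ in range(n)]
    for sigma in (0.0, 0.05, 0.2):
        for bits in (4, 8):
            err = relative_error(W, x, sigma, bits, full_scale=16.0)
            print(f"sigma={sigma:<4} adc_bits={bits}: relative error {err:.3f}")
```

In general the error grows as `sigma` increases and as `adc_bits` decreases; the specific numbers depend entirely on the made-up parameters. Noise-aware training, which exposes a network to such perturbations while it is trained, is one example of the algorithm-level adaptation mentioned above.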