Analog In-Memory Compute Chips

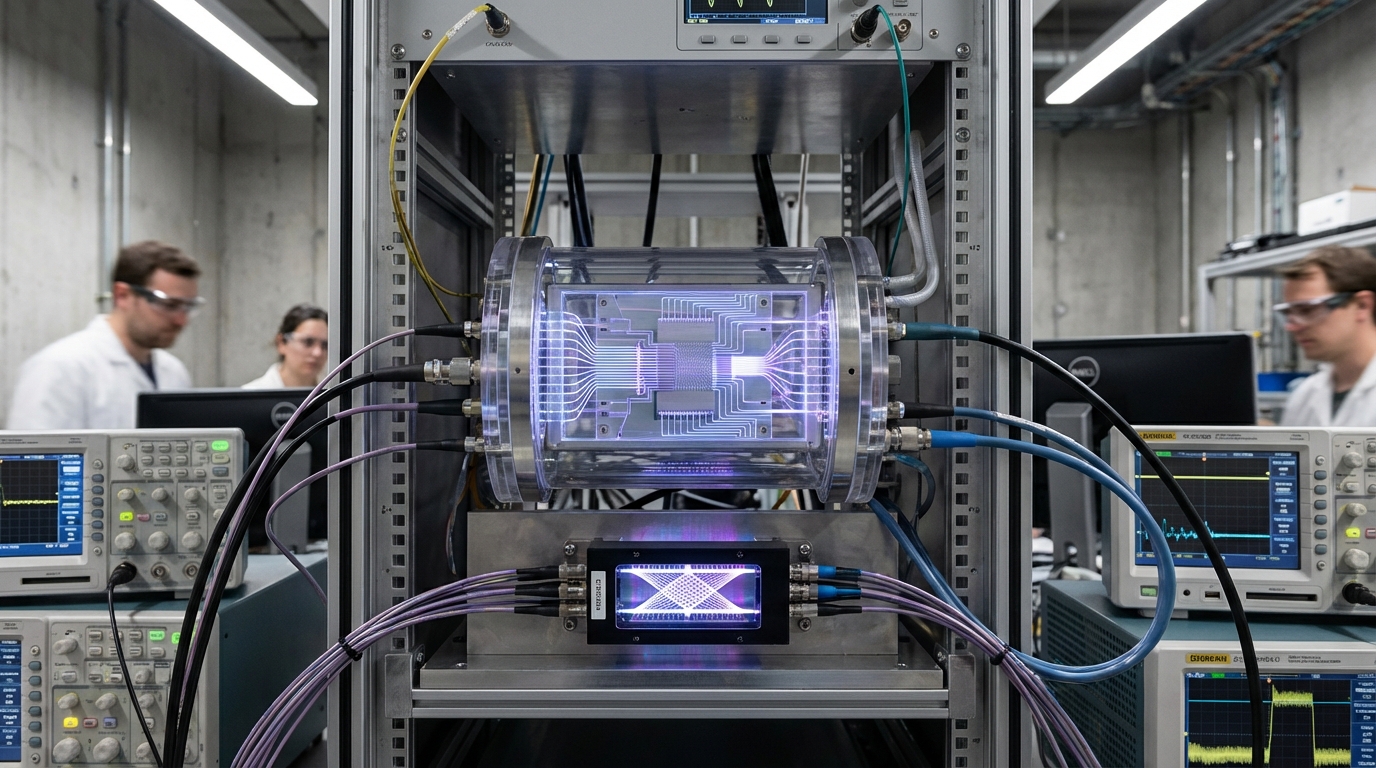

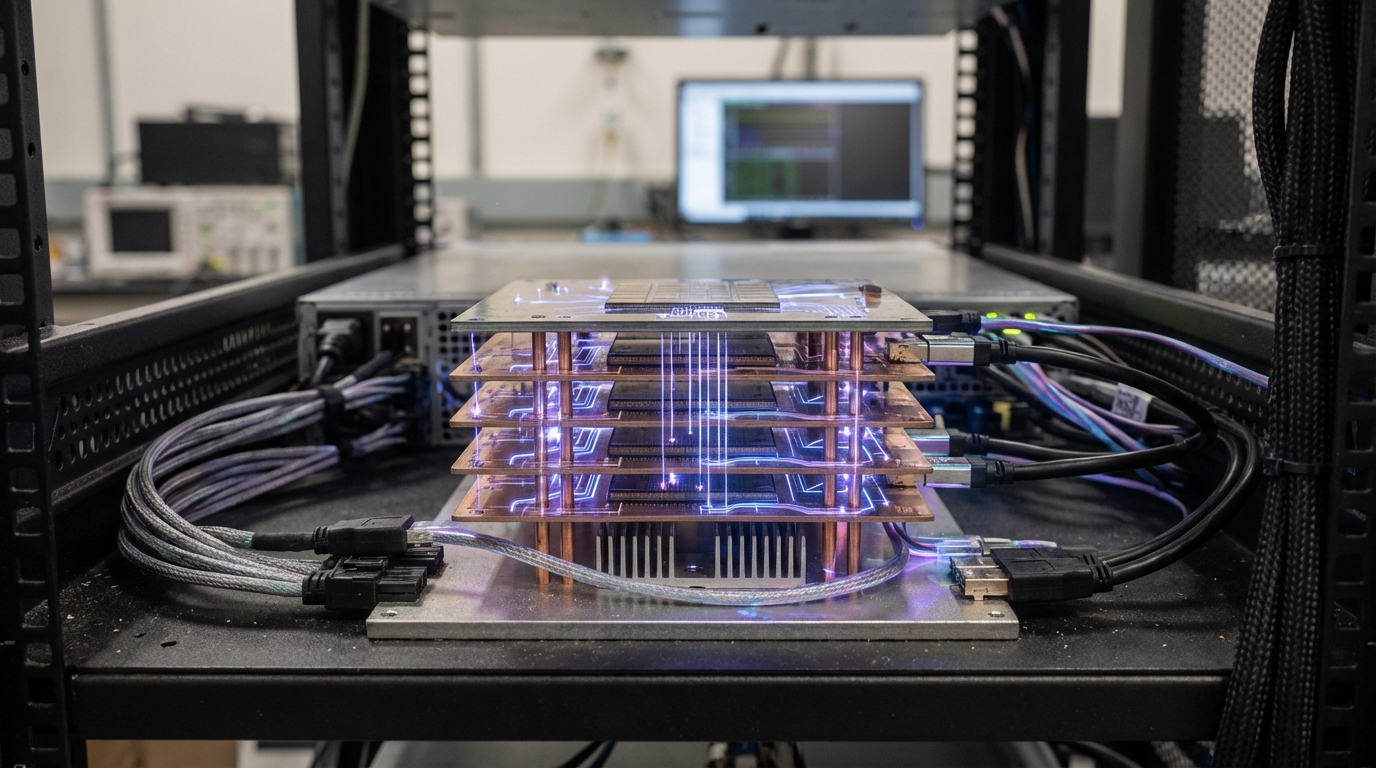

Analog in-memory compute chips perform calculations directly within the memory arrays where data is stored, avoiding much of the von Neumann bottleneck, in which a processor must constantly fetch data from separate memory. These chips carry out matrix operations in place with analog circuits: the physical properties of the memory cells, such as resistance or capacitance, produce the result. This can substantially reduce energy consumption and latency compared to conventional digital processors.
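To make the in-place matrix operation concrete, the following is a minimal sketch of an idealized resistive crossbar, not a model of any particular chip. It assumes weights are stored as cell conductances, inputs are applied as row voltages, and each column wire sums the resulting currents (Ohm's law per cell, Kirchhoff's current law per column). The function names, the differential-pair encoding of signed weights, and the `g_max` value are illustrative assumptions.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar: column current I_j = sum_i G[i][j] * V[i]."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage is needed per crossbar row")
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law per cell; the column wire accumulates the currents.
            currents[j] += conductances[i][j] * v
    return currents


def weights_to_conductance_pairs(weights, g_max=1e-4):
    """Map signed weights to two non-negative conductance arrays.

    Conductance cannot be negative, so this sketch assumes a differential
    pair per weight: w is proportional to (G_plus - G_minus).
    g_max (in siemens) is an assumed maximum cell conductance.
    """
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_max
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale


def analog_matvec(weights, x):
    """Compute y_j = sum_i weights[i][j] * x[i] via two ideal crossbars."""
    g_plus, g_minus, scale = weights_to_conductance_pairs(weights)
    i_plus = crossbar_mvm(g_plus, x)
    i_minus = crossbar_mvm(g_minus, x)
    # Subtract the paired column currents and undo the conductance scaling.
    return [(p - m) / scale for p, m in zip(i_plus, i_minus)]
```

For example, `analog_matvec([[1.0, -2.0], [0.5, 3.0]], [0.2, 0.4])` gives approximately `[0.4, 0.8]`, the same result as the digital matrix-vector product. In hardware, the whole sum would be formed in a single analog step rather than in nested loops, which is where the energy and latency savings are expected to come from.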

This approach targets the high energy cost of AI systems, in which moving data between processors and memory accounts for a significant share of power usage. By computing where the data resides, analog in-memory chips can achieve orders-of-magnitude improvements in energy efficiency for AI workloads, particularly transformer models and continual learning tasks. Companies such as Mythic and Syntiant, along with various research institutions, are developing these technologies, and some chips are already deployed in edge devices.

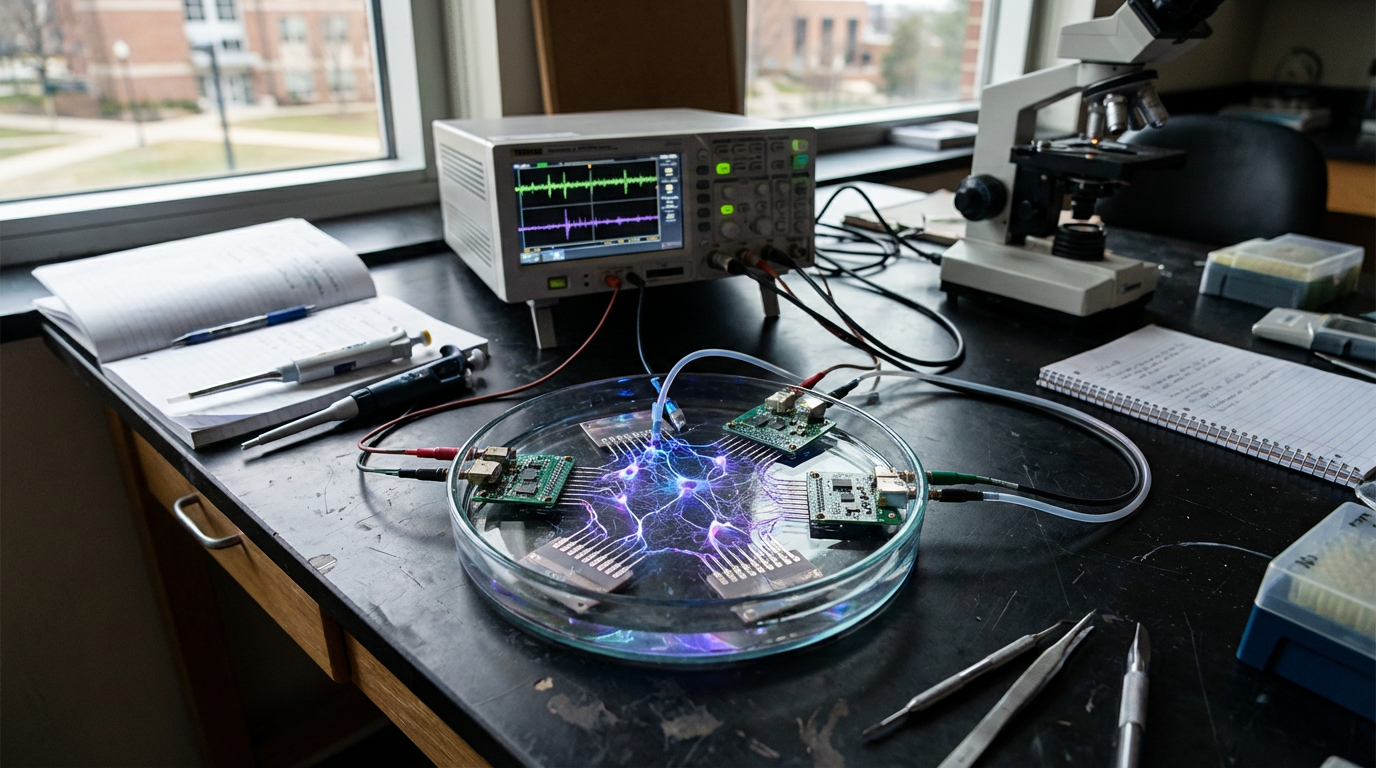

The technology is particularly valuable for edge AI applications where power constraints are critical, such as mobile devices, IoT sensors, and autonomous systems. As AI becomes more pervasive and energy efficiency turns into a competitive advantage, analog in-memory computing offers a path to deploying sophisticated AI capabilities in power-constrained environments. However, the technology faces several challenges: limited numerical precision, manufacturing variability between devices, and the need for specialized design tools and algorithms optimized for analog computation.
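The precision and variability challenges can be illustrated with a small simulation. The sketch below is an assumption-laden toy model, not a characterization of real devices: it quantizes each weight to a signed `bits`-bit grid (standing in for the limited number of programmable cell states) and multiplies it by Gaussian noise with relative standard deviation `sigma` (standing in for device-to-device variation). The function names and default values are hypothetical.

```python
import random


def quantize(w, w_max, bits):
    """Round w to the nearest level on a symmetric signed grid (bits >= 2)."""
    levels = 2 ** (bits - 1) - 1      # e.g. 7 positive levels for 4 bits
    step = w_max / levels
    q = round(w / step)
    q = max(-levels, min(levels, q))  # clamp to the representable range
    return q * step


def noisy_matvec(weights, x, bits=4, sigma=0.05, seed=0):
    """Compute y_j = sum_i weights[i][j] * x[i] with non-ideal weights.

    bits  : assumed weight resolution of a memory cell
    sigma : assumed relative std. dev. of per-device variation
    seed  : fixes the pseudo-random "manufactured" device values
    """
    rng = random.Random(seed)
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    out = [0.0] * len(weights[0])
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            w_q = quantize(w, w_max, bits)
            # Multiplicative noise models variation in the programmed value.
            w_dev = w_q * (1.0 + rng.gauss(0.0, sigma))
            out[j] += w_dev * x[i]
    return out
```

Comparing `noisy_matvec(weights, x)` against the exact product for different `bits` and `sigma` values shows how the output error grows as resolution drops or variation increases. This is the kind of effect that noise-aware training and calibration algorithms aim to compensate for.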