Analog AI Accelerators

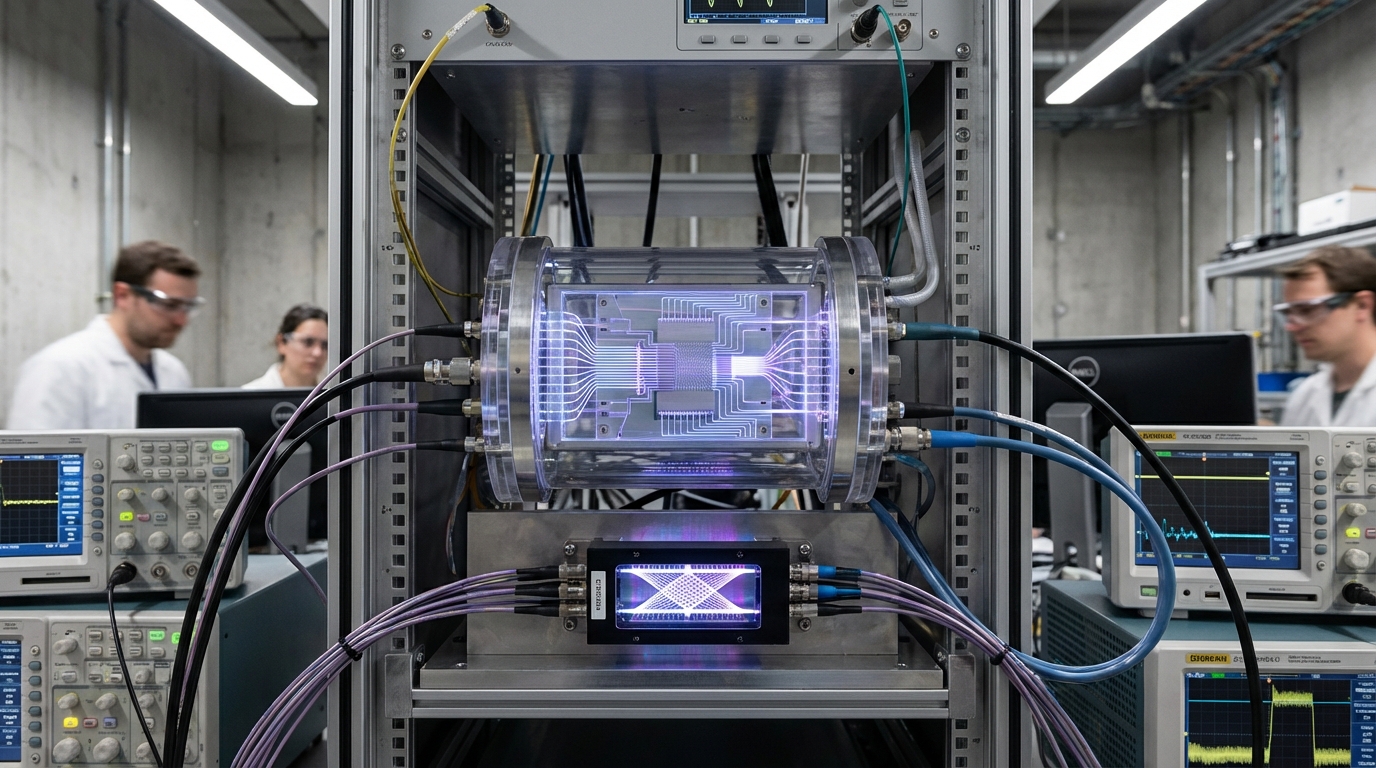

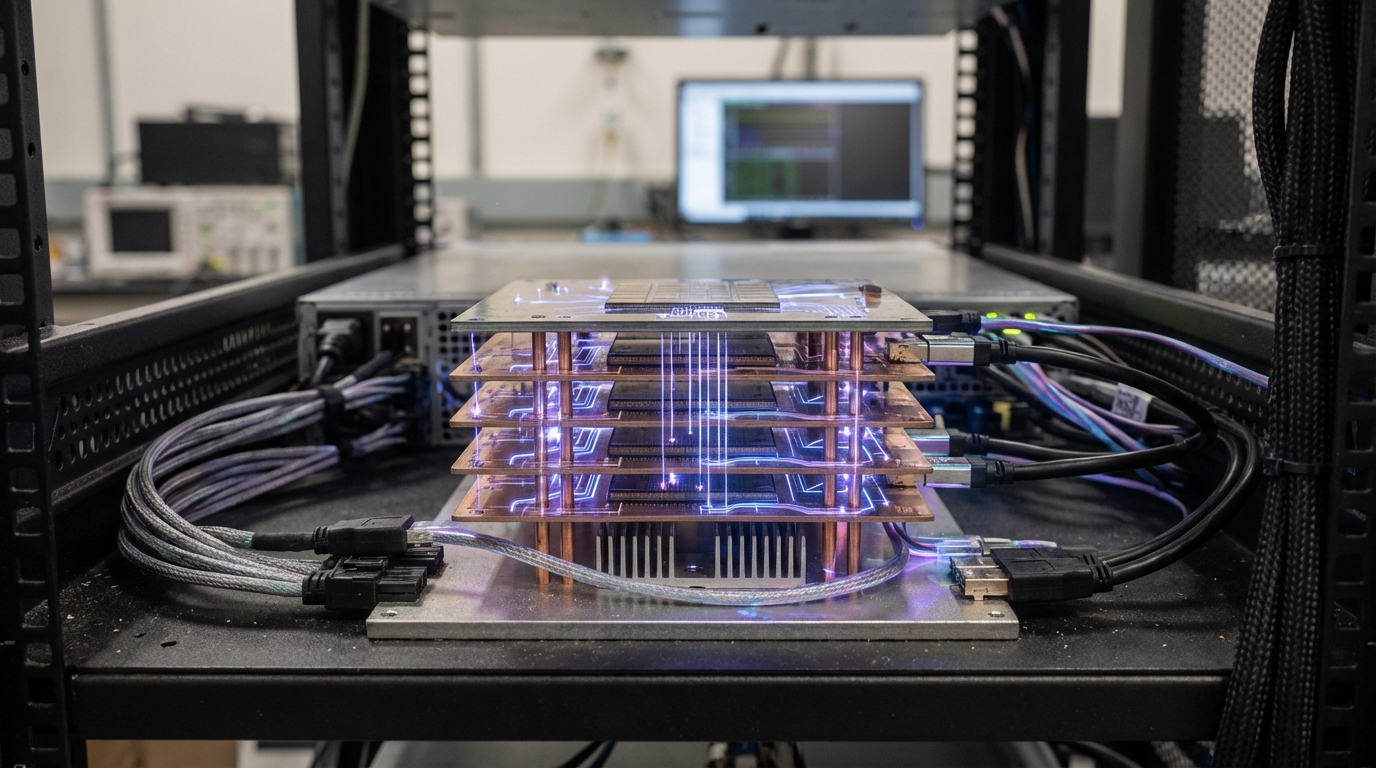

Analog AI accelerators use continuous physical processes, such as charge accumulation in capacitors or optical interference in photonic circuits, to perform the matrix multiplications that are central to neural network computation. Digital processors represent values as discrete bits and carry out each multiply-accumulate as a sequence of logic operations. Analog systems instead encode values in continuous physical quantities and let the physics perform the multiply-accumulate directly. For suitable workloads, this can potentially yield orders-of-magnitude better energy efficiency than digital GPUs, measured in TOPS per watt (trillions of operations per second per watt).
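To make the idea concrete, here is a minimal sketch of a matrix-vector product computed in an idealized "analog" style and compared with an exact digital one. It is a toy model and does not simulate any particular device: the Gaussian noise term, the `noise_std` and `adc_bits` parameters, and the function names are all assumptions introduced for illustration. The noise stands in for imperfections of the physical process, and the final rounding stands in for an analog-to-digital converter reading out the result.

```python
import random


def digital_matvec(weights, x):
    """Exact matrix-vector product, as a digital processor would compute it."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def analog_matvec(weights, x, noise_std=0.01, adc_bits=8, rng=None):
    """Toy model of an analog matrix-vector product (illustrative assumption).

    Each output is the ideal dot product plus Gaussian noise, clipped to a
    full-scale range and rounded to the nearest level of a hypothetical ADC.
    """
    rng = rng or random.Random(0)
    # Full-scale range: the largest output magnitude these operands can produce.
    full_scale = max(
        sum(abs(w * xi) for w, xi in zip(row, x)) for row in weights
    ) or 1.0
    levels = 2 ** (adc_bits - 1) - 1   # signed ADC levels on each side of zero
    step = full_scale / levels         # size of one quantization step
    outputs = []
    for row in weights:
        ideal = sum(w * xi for w, xi in zip(row, x))
        noisy = ideal + rng.gauss(0.0, noise_std * full_scale)
        clipped = max(-full_scale, min(full_scale, noisy))
        outputs.append(round(clipped / step) * step)
    return outputs


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0]]
    x = [1.0, 1.0]
    print("digital:", digital_matvec(W, x))
    print("analog :", analog_matvec(W, x, noise_std=0.02, adc_bits=6))
```

With `noise_std=0.0` and a high `adc_bits`, the analog result converges to the digital one (`[3.0, 7.0]` for the inputs above). Raising the noise or lowering the ADC resolution shows how precision degrades, which is the trade-off the energy savings are bought with.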

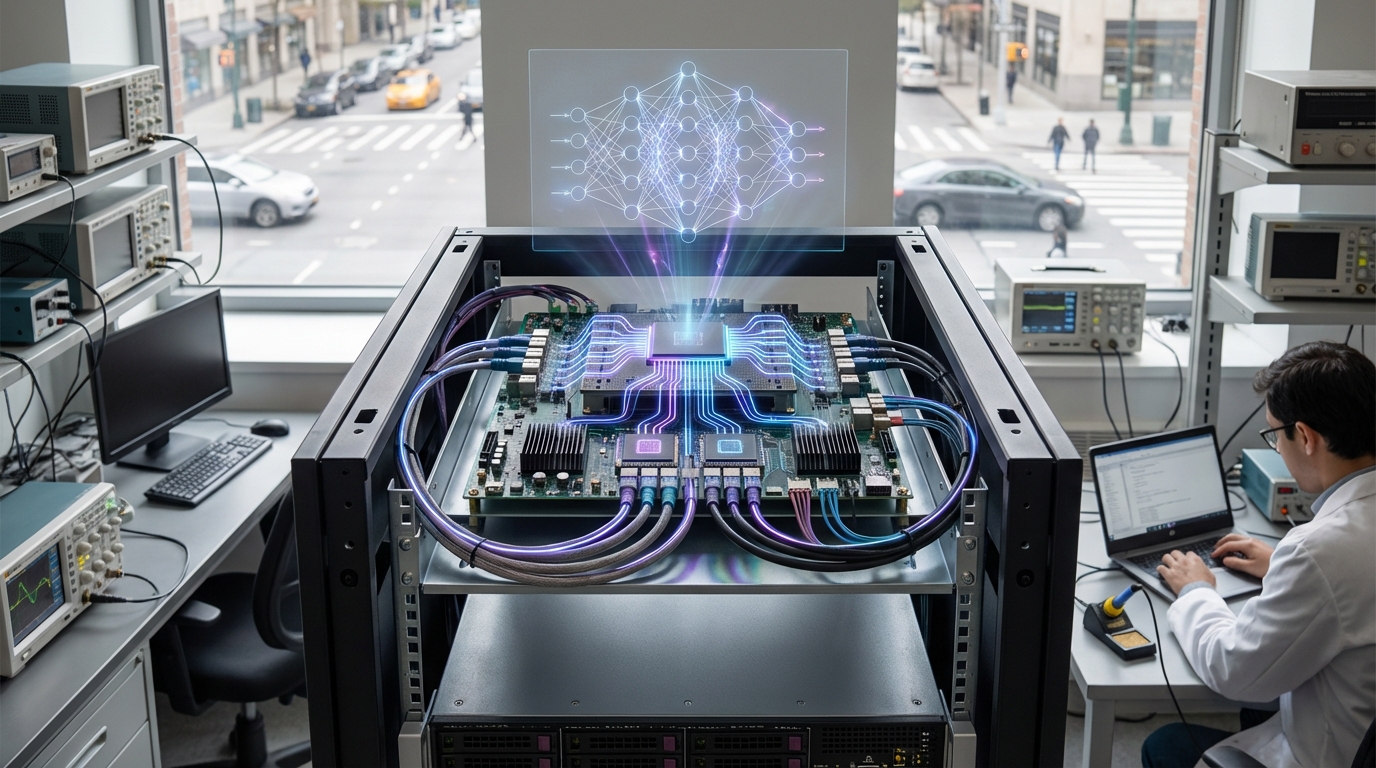

The approach targets the massive energy consumption of AI inference in data centers, where running large language models and other AI systems requires enormous computational resources. Because the analog hardware is optimized for the multiply-accumulate operations that dominate these workloads, it can substantially reduce the power drawn by inference tasks, making AI more sustainable and cost-effective. Companies are developing these technologies for data center deployment, and some systems are already being piloted for specific workloads such as search, recommendations, and AI copilots.

The technology is particularly significant as AI inference scales to serve billions of users, where energy efficiency becomes both an economic and an environmental imperative. As enterprises deploy AI more broadly, analog accelerators could lower the energy and operating cost of the underlying infrastructure. However, the technology faces several challenges: limited numerical precision, the need for calibration, and the difficulty of implementing the full range of AI operations in analog. In practice, complete AI workloads therefore require hybrid analog-digital systems, in which the analog hardware handles the matrix multiplications and digital logic handles the remaining operations.
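The calibration and hybrid steps mentioned above can be sketched as follows. This is an illustrative assumption rather than a description of any vendor's procedure: it supposes that an analog output channel distorts the true value by an unknown gain and offset, estimates both from known reference inputs with a least-squares fit, and then applies the correction and a nonlinearity (ReLU) in digital logic. The function names `fit_gain_offset`, `correct`, and `relu` are hypothetical.

```python
def fit_gain_offset(reference, measured):
    """Least-squares fit of measured = gain * reference + offset (approximately)."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_m = sum(measured) / n
    var_r = sum((r - mean_r) ** 2 for r in reference)
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(reference, measured))
    gain = cov / var_r
    offset = mean_m - gain * mean_r
    return gain, offset


def correct(measured_value, gain, offset):
    """Digitally undo the fitted gain and offset of an analog channel."""
    return (measured_value - offset) / gain


def relu(values):
    """Nonlinearity kept in the digital domain in this hybrid sketch."""
    return [max(0.0, v) for v in values]


if __name__ == "__main__":
    # Known test values driven through a (simulated) miscalibrated channel.
    reference = [-1.0, 0.0, 1.0, 2.0]
    measured = [0.9 * r + 0.05 for r in reference]  # assumed distortion

    gain, offset = fit_gain_offset(reference, measured)
    recovered = [correct(m, gain, offset) for m in measured]
    print("gain:", gain, "offset:", offset)
    print("after digital correction and ReLU:", relu(recovered))
```

Real devices may drift with temperature and age, so a correction like this would likely need to be re-estimated periodically. The linear gain-and-offset model is the simplest choice and may not capture nonlinear distortion.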