Neural light-field cameras

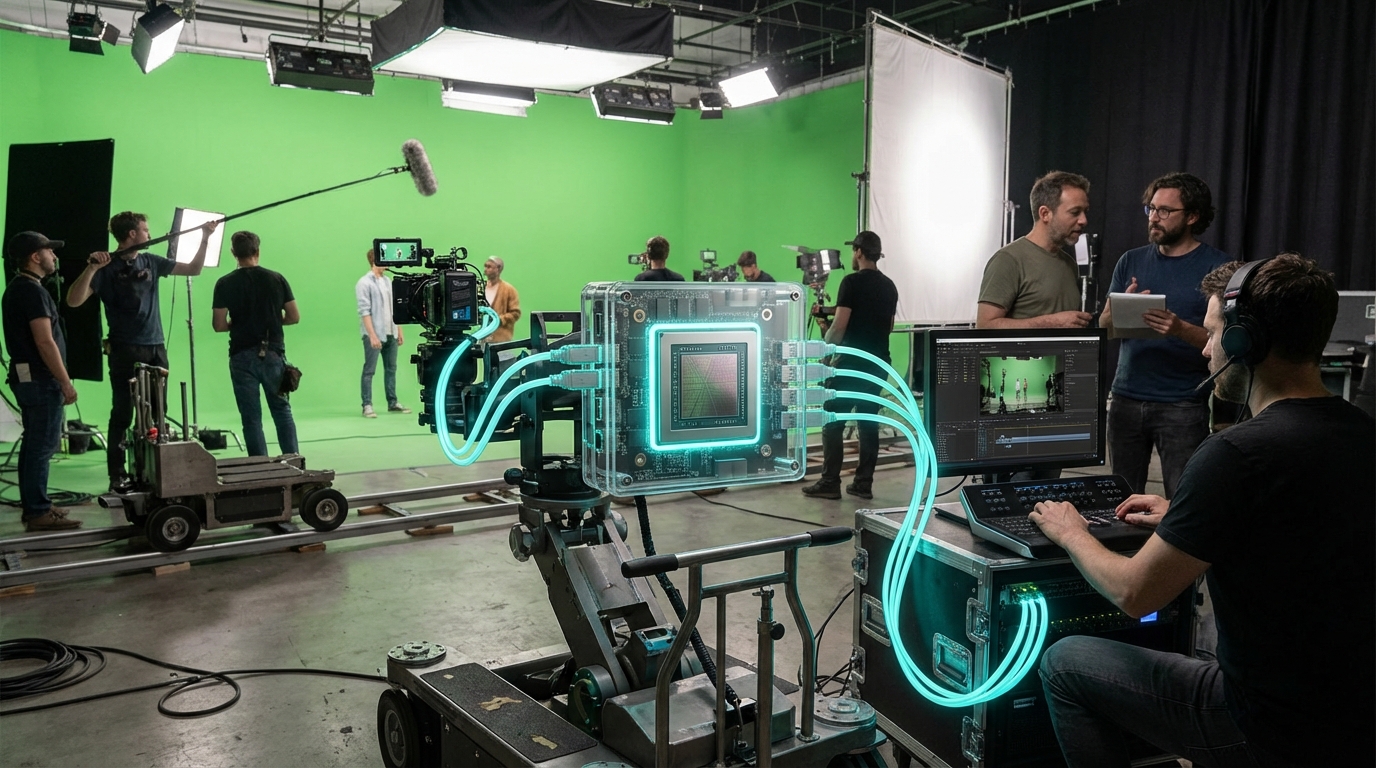

Neural light-field cameras combine dense plenoptic sensor arrays with neural radiance field reconstruction so that every pixel stores both the intensity and the direction of the incoming light. Instead of stitching a handful of viewpoints, the capture rig samples thousands of micro-baselines and uses transformer-style encoders to learn a continuous representation of the scene. The result is a manipulable volumetric asset in which focus, parallax, and depth of field can be adjusted after the shoot, letting editors treat light itself as editable data.
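To make post-shoot refocusing concrete, here is a minimal sketch of classical shift-and-add refocusing on a tiny synthetic light field. It is not the neural reconstruction described above, only the simpler geometric idea it builds on: each sub-aperture view sees a scene point displaced in proportion to its depth, so shifting the views by a chosen slope and averaging them brings one depth plane into focus. The function names (`make_point_light_field`, `refocus`, `peak`) and the integer-disparity toy scene are assumptions made for illustration.

```python
def make_point_light_field(size, center, disparity, radius):
    """Synthetic light field: one bright point seen from a square grid of views.

    View (u, v) sees the point displaced by disparity * (u, v) pixels,
    which stands in for the parallax of a point at a fixed depth.
    """
    cx, cy = center
    views = {}
    for u in range(-radius, radius + 1):
        for v in range(-radius, radius + 1):
            img = [[0.0] * size for _ in range(size)]
            x, y = cx + disparity * u, cy + disparity * v
            if 0 <= x < size and 0 <= y < size:
                img[y][x] = 1.0
            views[(u, v)] = img
    return views


def refocus(views, slope):
    """Shift-and-add refocus: sample each view at an offset of
    slope * (u, v) and average across all views."""
    size = len(next(iter(views.values())))
    out = [[0.0] * size for _ in range(size)]
    for (u, v), img in views.items():
        dx, dy = slope * u, slope * v
        for y in range(size):
            for x in range(size):
                sx, sy = x + dx, y + dy
                if 0 <= sx < size and 0 <= sy < size:
                    out[y][x] += img[sy][sx]
    n = len(views)
    return [[val / n for val in row] for row in out]


def peak(image):
    """Brightest pixel value, used here as a crude sharpness measure."""
    return max(max(row) for row in image)


# A 3x3 grid of views looking at a point with disparity 2.
light_field = make_point_light_field(size=9, center=(4, 4), disparity=2, radius=1)
in_focus = refocus(light_field, slope=2)   # slope matches the point's disparity
defocused = refocus(light_field, slope=0)  # plain average of the views
print(peak(in_focus), peak(defocused))
```

When the slope matches the point's disparity, all nine views align and the point keeps its full brightness; with a slope of zero the same energy is spread over nine pixels. Changing the focal plane is therefore just a change of one parameter applied to data that has already been captured.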

For media producers, this collapses the gap between live-action and CG pipelines. Immersive studios such as Arcturus, 8i, and Canon’s Kokomo group are exploring light-field stages for volumetric actors, while sports broadcasters see the technology as a path to holographic replays without the cost of motion-capture suits. Because the neural reconstruction estimates surface normals and material properties, downstream teams can relight or restage performances for AR lenses, mobile volumetric stories, or mixed-reality concerts without pulling talent back on set.

Adoption remains early (technology readiness level 4, meaning validated in the lab) because rigs are expensive and neural reconstruction still requires GPU farms. Even so, the trajectory mirrors the early days of digital cinema. Research labs are optimizing sparse-camera solutions, and standardization efforts within SMPTE and the Metaverse Standards Forum aim to define interoperable light-field codecs. As those pieces mature, neural light-field capture is poised to become the master format for next-gen storytelling, feeding everything from cinematic VR to adaptive advertising.