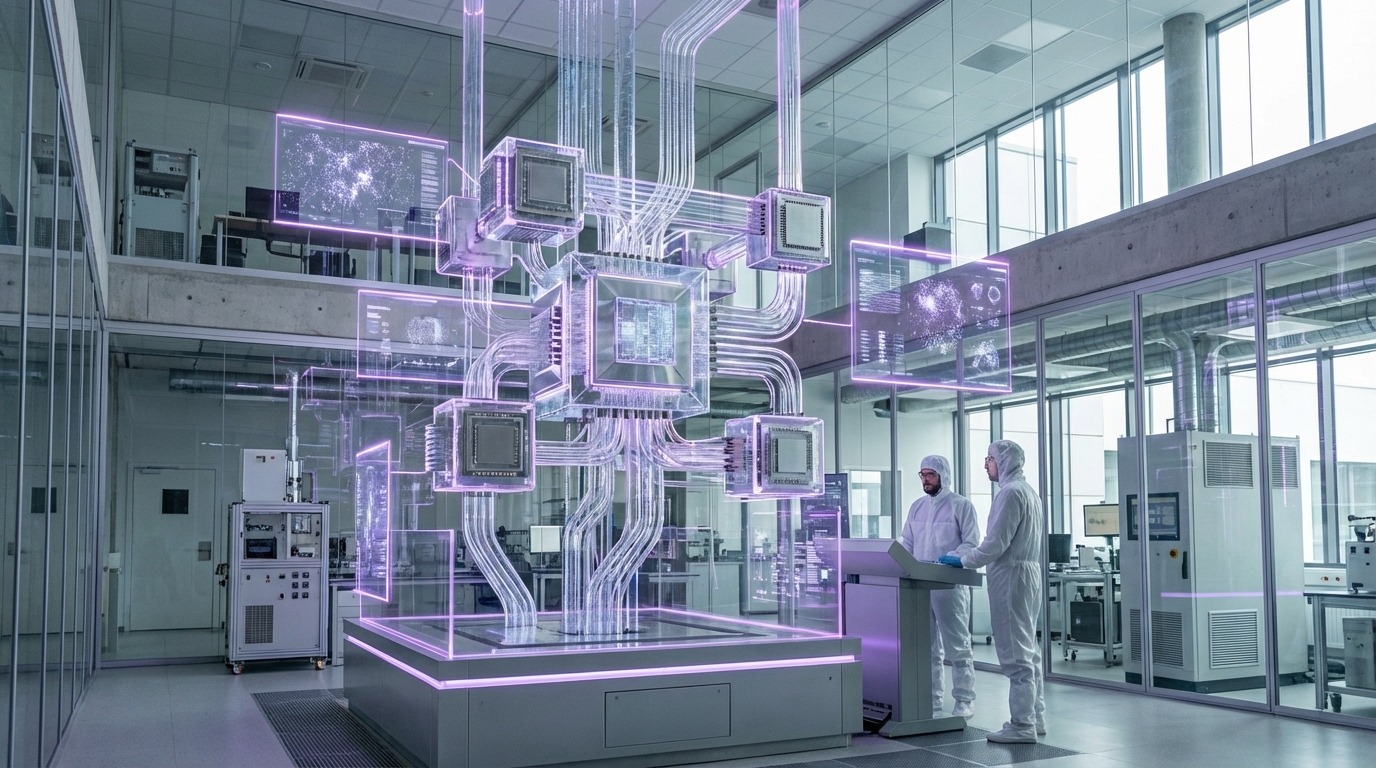

Artificial Superintelligence

AI systems surpassing human cognitive capabilities across all domains.

Artificial superintelligence (ASI) refers to AI systems that significantly surpass human intelligence across all domains of cognitive ability, including scientific creativity, general wisdom, and social skills. Unlike artificial general intelligence (AGI), which would match human-level intelligence, ASI would exceed human capabilities in every measurable way. Such systems might also improve themselves recursively, producing rapid capability growth that could quickly outpace human comprehension and control.

The emergence of ASI could fundamentally transform human civilization. It might solve problems that have eluded humanity for centuries, such as disease, aging, climate change, and resource scarcity, while also posing existential risks if it is not developed and controlled carefully. ASI could accelerate scientific and technological progress beyond human capacity, potentially making human researchers obsolete in many fields. The technology raises profound questions about control, alignment with human values, and the future role of humanity in a world shared with superintelligent entities.

At technology readiness level (TRL) 2, artificial superintelligence remains theoretical: there is no clear path to building it, and whether it is even possible or desirable is actively debated. Research in AI safety, alignment, and control explores how such systems might be developed safely, though many experts believe ASI is decades or more away, if it is achievable at all. Fundamental challenges include understanding intelligence itself, keeping AI systems aligned with human values as they become more capable, and developing control mechanisms for systems that may be far more intelligent than their creators. Given the potential impact, both positive and negative, many researchers consider research into ASI safety and development critical. If ASI is eventually developed, it could be humanity's most significant achievement or its greatest challenge, reshaping civilization in ways that are difficult to predict.
