Ultrasonic mid-air haptic displays

Ultrasonic mid-air haptic displays use dense phased arrays of transducers, typically emitting ultrasound at 40 kHz or higher. They adjust each transducer's phase so the waves interfere constructively at precise points in space. A steady focal point exerts only a faint, constant radiation force that skin barely registers, so systems modulate it. They either pulse its amplitude at a low frequency (on the order of 200 Hz) or rapidly move the focal point along a path. The resulting vibrations are perceived by skin mechanoreceptors as texture, taps, or airflow, without requiring gloves or wearables. Modern prototypes synchronize with optical tracking so the focal point follows a user's hands, letting audiences feel volumetric story elements as they materialize.
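To make the focusing step concrete, here is a minimal sketch. It assumes idealized point sources (no transducer directivity or air attenuation), a speed of sound of 343 m/s, a 40 kHz carrier, and a hypothetical flat 16 × 16 array with 10 mm pitch. The names `grid_array`, `focus_phases`, `pressure`, and `am_envelope` are illustrative only and are not a vendor API. Each transducer is driven with a phase that cancels its own propagation delay to the focal point, so all contributions add in phase there.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumption)
CARRIER_HZ = 40_000.0   # assumed 40 kHz transducers
WAVENUMBER = 2 * np.pi * CARRIER_HZ / SPEED_OF_SOUND  # k = 2*pi/wavelength, rad/m


def grid_array(n: int = 16, pitch: float = 0.01) -> np.ndarray:
    """Positions (n*n, 3) of a hypothetical flat n x n array centred on the origin at z = 0."""
    coords = (np.arange(n) - (n - 1) / 2) * pitch
    xs, ys = np.meshgrid(coords, coords)
    return np.column_stack([xs.ravel(), ys.ravel(), np.zeros(n * n)])


def focus_phases(positions: np.ndarray, focal_point: np.ndarray) -> np.ndarray:
    """Drive phase per transducer so every wave arrives in phase at focal_point."""
    distances = np.linalg.norm(positions - focal_point, axis=1)
    # Each wave lags by k*d on its way to the focus; emitting with a +k*d phase
    # offset (wrapped to [0, 2*pi)) cancels that lag exactly at the focal point.
    return np.mod(WAVENUMBER * distances, 2 * np.pi)


def pressure(positions: np.ndarray, phases: np.ndarray, point: np.ndarray) -> complex:
    """Complex pressure at point, modelling transducers as ideal unit monopoles
    (spherical spreading only; no directivity, attenuation, or nonlinearity)."""
    r = np.linalg.norm(positions - point, axis=1)
    return complex(np.sum(np.exp(1j * (phases - WAVENUMBER * r)) / r))


def am_envelope(t: float, mod_hz: float = 200.0) -> float:
    """Amplitude-modulation gain in [0, 1] at time t (seconds), pulsing at mod_hz."""
    return 0.5 * (1.0 + np.sin(2 * np.pi * mod_hz * t))


if __name__ == "__main__":
    array = grid_array()
    focus = np.array([0.0, 0.0, 0.20])  # 20 cm above the array centre
    phases = focus_phases(array, focus)
    on_focus = abs(pressure(array, phases, focus))
    off_focus = abs(pressure(array, phases, focus + np.array([0.03, 0.0, 0.0])))
    print(f"pressure 3 cm off focus relative to the focus: {off_focus / on_focus:.2f}")
```

The demo should print a ratio well below 1. Contributions that add in phase at the focus largely cancel a few centimetres to the side. Moving the focus along a path amounts to recomputing the phases for each new point. Amplitude modulation instead scales every drive amplitude by the envelope over time.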

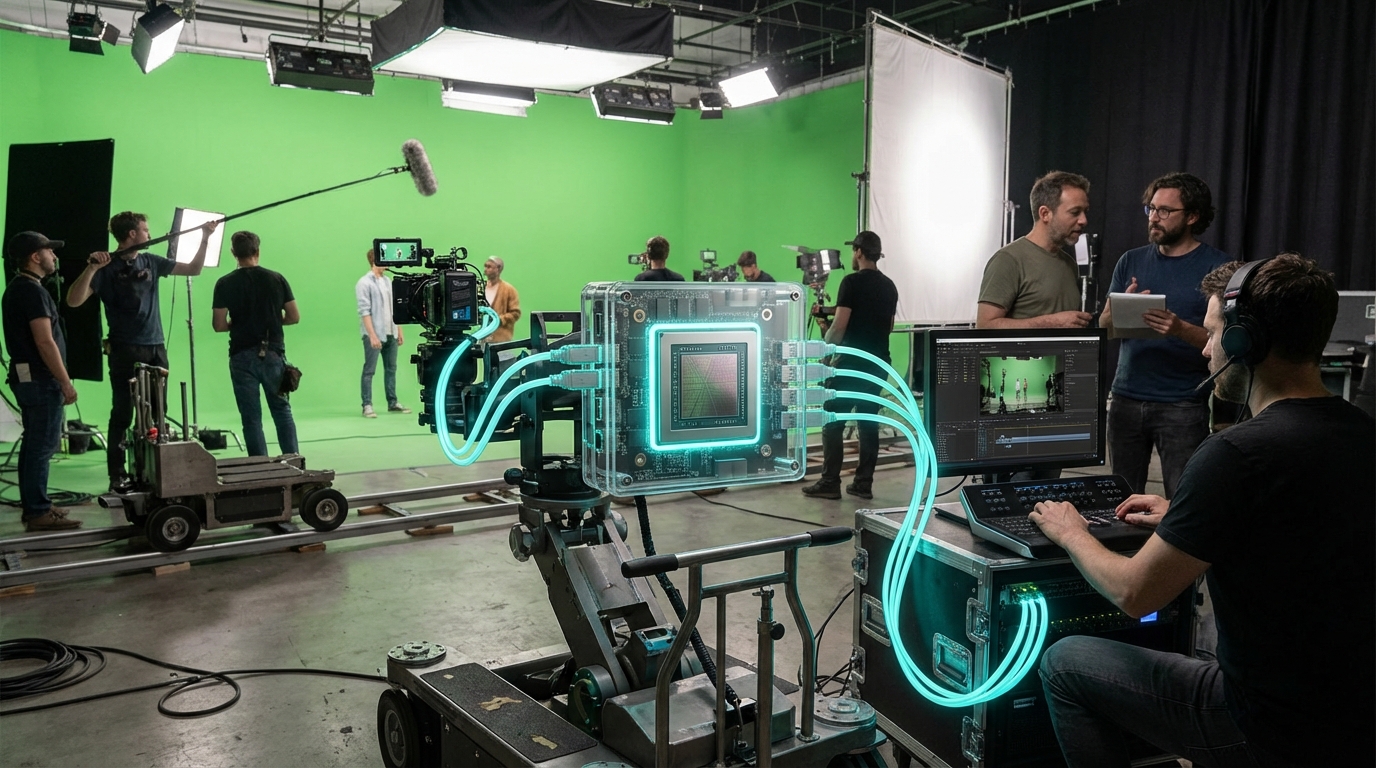

For experiential media creators, this unlocks contact-rich interactions in museum exhibits, retail pop-ups, and virtual production stages where hygiene, accessibility, and quick throughput matter. Groups such as Ultraleap, Imverse, and Disney Research pair the arrays with particle simulations so visitors can "press" holographic buttons, feel raindrops during narrative beats, or receive haptic cues in live mixed-reality performances. Because visitors wear nothing, compliance is also simpler for multi-user venues and theme parks, where hardware must withstand constant operation.

Apart from a few pilot installations, the technology sits around TRL 3–4 (validated in the lab). It is still evolving toward slimmer modules, wider interaction volumes, and richer sensations. Integration work focuses on two areas. The first is authoring tools that let motion-graphics teams keyframe haptic events alongside light and sound. The second is safety frameworks for sustained exposure. As chipmakers develop integrated driver ASICs and content platforms expose haptic APIs, mid-air ultrasound feedback could become a standard layer for immersive stages, broadcast studios, and XR arcades.
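Keyframing haptic events alongside light and sound can be pictured as one more animation channel that is sampled over time. The sketch below is a minimal illustration built on several assumptions. It uses a hypothetical track format (`HapticKey` with a time, a focal position in metres, and an intensity from 0 to 1) and linear interpolation between keys, and it holds the nearest key outside the track's range. Real authoring tools may use different curve types and data models.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class HapticKey:
    """Hypothetical keyframe: time (s), focal point (m), intensity in [0, 1]."""
    time: float
    position: tuple[float, float, float]
    intensity: float


def sample_track(keys: list[HapticKey], t: float) -> tuple[tuple[float, float, float], float]:
    """Linearly interpolate focal position and intensity at time t.

    keys must be sorted by time. Before the first key or after the last,
    the nearest key is held.
    """
    if not keys:
        raise ValueError("track has no keyframes")
    times = [k.time for k in keys]
    i = bisect_right(times, t)  # keys[i-1].time <= t < keys[i].time
    if i == 0:
        return keys[0].position, keys[0].intensity
    if i == len(keys):
        return keys[-1].position, keys[-1].intensity
    a, b = keys[i - 1], keys[i]
    u = (t - a.time) / (b.time - a.time)  # 0 at key a, 1 at key b
    pos = tuple(pa + u * (pb - pa) for pa, pb in zip(a.position, b.position))
    return pos, a.intensity + u * (b.intensity - a.intensity)


if __name__ == "__main__":
    track = [
        HapticKey(0.0, (0.0, 0.0, 0.2), 0.0),
        HapticKey(0.5, (0.0, 0.0, 0.2), 1.0),   # ramp up as a "raindrop" lands
        HapticKey(1.0, (0.04, 0.0, 0.2), 0.0),  # drift sideways and fade out
    ]
    print(sample_track(track, 0.25))  # prints ((0.0, 0.0, 0.2), 0.5)
```

In a full pipeline, each sampled position and intensity would be handed to the array's focusing and modulation stage at the device's update rate. Using the same time base as the lighting and audio cues would keep the three in sync.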