Generative Hardware Accelerators

Generative hardware accelerators bake transformer-friendly tensor cores, on-die SRAM, and sparsity-aware schedulers into phone, console, and camera chipsets so that diffusion models and LLMs can run locally. Vendors such as Apple, Qualcomm, and MediaTek, along with startups like Tenstorrent, co-design hardware and runtimes that keep attention layers resident in fast on-chip memory, compress activations with low-bit quantization, and integrate with the image signal processor (ISP) pipeline so that text-to-image generation or audio synthesis runs on-device at interactive speeds. These accelerators also expose APIs for video upscaling, speech synthesis, and style transfer.
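To make "low-bit quantization" concrete, here is a minimal sketch of symmetric, per-tensor int8 quantization written with NumPy. It is illustrative only: the function names are hypothetical, and production accelerator toolchains typically add per-channel or per-group scales, calibration data, and packed sub-byte formats that this sketch omits. It shows the core idea: a single floating-point scale maps values onto a small integer range, cutting storage by 4x relative to float32 while bounding the rounding error.

```python
import numpy as np

def quantize_symmetric(x: np.ndarray, num_bits: int = 8):
    """Map float values to signed integers using one scale for the whole tensor.

    Hypothetical helper; assumes 2 <= num_bits <= 8 so results fit in int8
    containers (true sub-byte packing is not shown here).
    """
    qmax = 2 ** (num_bits - 1) - 1                 # 127 for 8-bit
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the symmetric range.
    q = np.clip(np.round(x / scale), -qmax, qmax).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float values from the integer codes."""
    return q.astype(np.float32) * scale

# Stand-in for a block of activations (random data, not a real model).
rng = np.random.default_rng(0)
activations = rng.standard_normal((4, 64)).astype(np.float32)

q, scale = quantize_symmetric(activations)
restored = dequantize(q, scale)

print(activations.nbytes, q.nbytes)  # 1024 256
print("max abs error:", float(np.max(np.abs(activations - restored))))
```

Because every value is rounded to the nearest multiple of `scale`, the reconstruction error stays within about half a quantization step; smaller bit widths shrink storage further at the cost of a coarser step.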

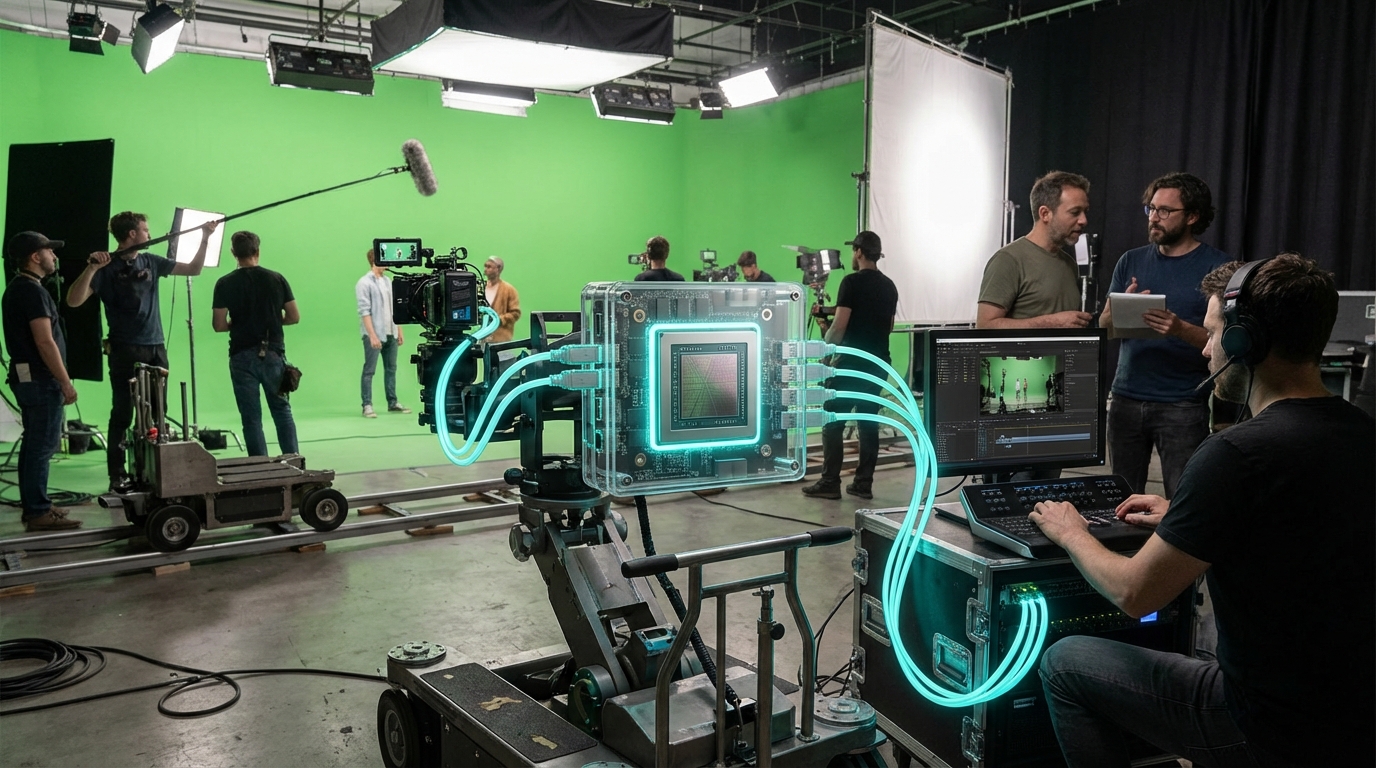

Moving generative workloads to the edge eliminates the network round trip to the cloud and reduces cloud inference costs for media apps. Mobile editing suites can synthesize B-roll, fill plates, or localize copy offline; broadcast switchers can transcribe or rephrase commentary in real time without sending feeds to a data center. Privacy-sensitive creators such as journalists, therapists, and classroom streamers prefer on-device models because raw footage never has to be uploaded, while game studios are eyeing console accelerators to procedurally author worlds as players explore.

The technology is rated TRL 6: hardware is already shipping in flagship devices, but software ecosystems lag behind. Toolmakers must refactor models to fit low-power envelopes, and regulators are debating whether on-device generative AI should still log provenance signals for watermarking regimes. Expect the next wave of mobile workstations and XR rigs to ship with dedicated diffusion blocks, while studios design assets on the assumption that every viewer carries a pocket inference engine capable of remixing media on demand.
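A quick back-of-envelope calculation shows why models need refactoring before they fit a phone-class memory budget. The sketch below assumes a hypothetical 3-billion-parameter model and counts weights only, ignoring activations, the attention KV cache, and quantization metadata such as per-group scales; the helper name is illustrative.

```python
def weight_footprint_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in GiB (2**30 bytes)."""
    return num_params * bits_per_weight / 8 / 2**30

# Hypothetical 3B-parameter model; real deployments also need room for
# activations, KV cache, and quantization scales.
NUM_PARAMS = 3e9
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_footprint_gib(NUM_PARAMS, bits):.2f} GiB")
```

Under these assumptions the weights drop from roughly 5.6 GiB at 16-bit to about 1.4 GiB at 4-bit, which is the kind of reduction that decides whether a model can share memory with the rest of a mobile app.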