Regulatory Sandboxes for Synthetic Minds

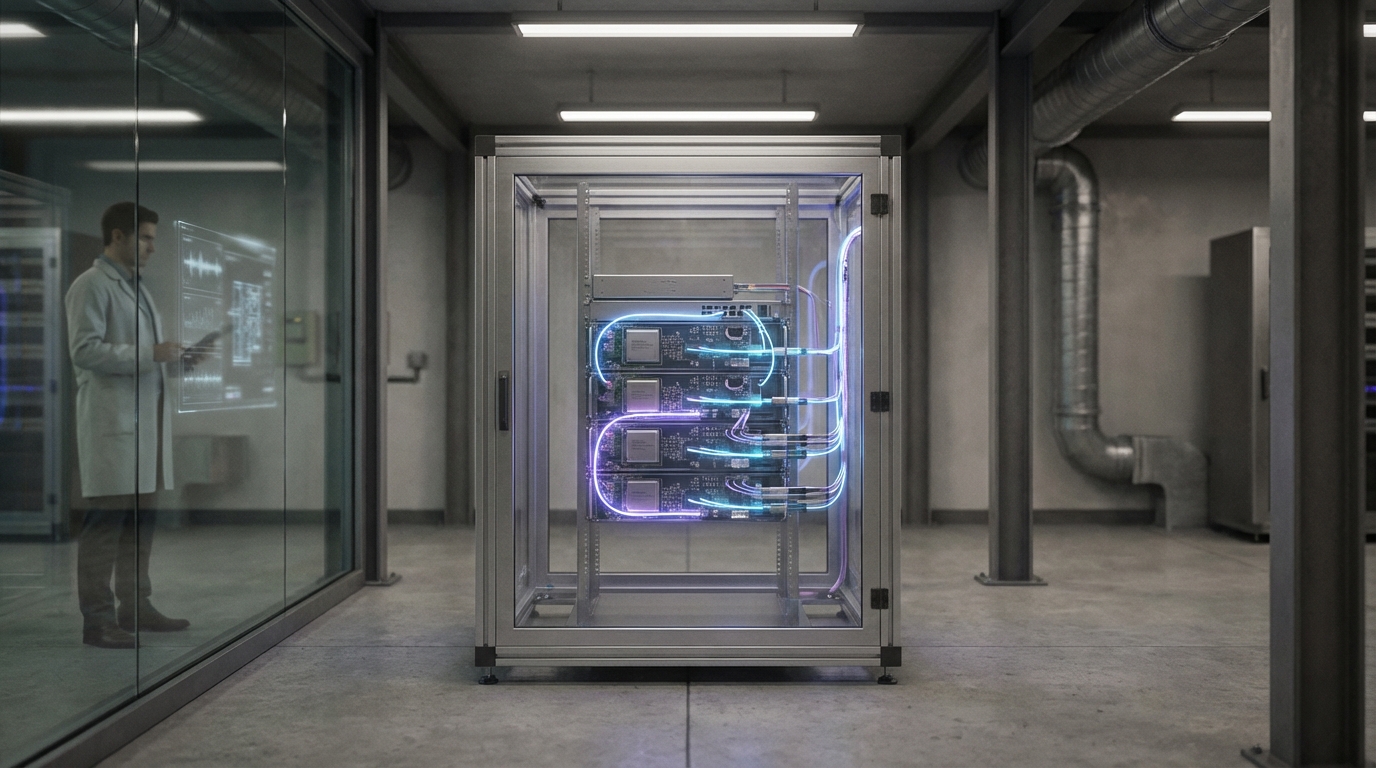

Regulatory sandboxes for synthetic minds are controlled, supervised environments where high-risk AI systems can be deployed, tested, and studied under close oversight before they are released for broader deployment. These sandboxes let regulators, researchers, and developers work together to test AI systems safely, observe emergent behaviors, develop and refine governance mechanisms, and jointly evolve standards and regulations based on real-world experience with advanced AI systems.

This approach addresses the challenge of regulating AI systems that evolve rapidly and may pose significant risks. In this setting, traditional regulation may be too slow to keep pace or too restrictive to permit useful experimentation. By providing controlled environments for experimentation, sandboxes allow participants to learn and adapt while keeping risks contained. Regulators gain a better understanding of AI systems, developers can test systems under supervision, and standards can evolve from empirical evidence rather than speculation.

The approach is particularly valuable for frontier AI systems, whose risks and capabilities are not fully understood. As AI systems become more capable and potentially more dangerous, safe environments for studying them become crucial to developing appropriate governance. However, designing sandboxes that contain risks while still allowing meaningful experimentation remains difficult. Regulators and researchers are exploring the concept, but practical implementations are still at an early stage.