Sensory Encoding Algorithms

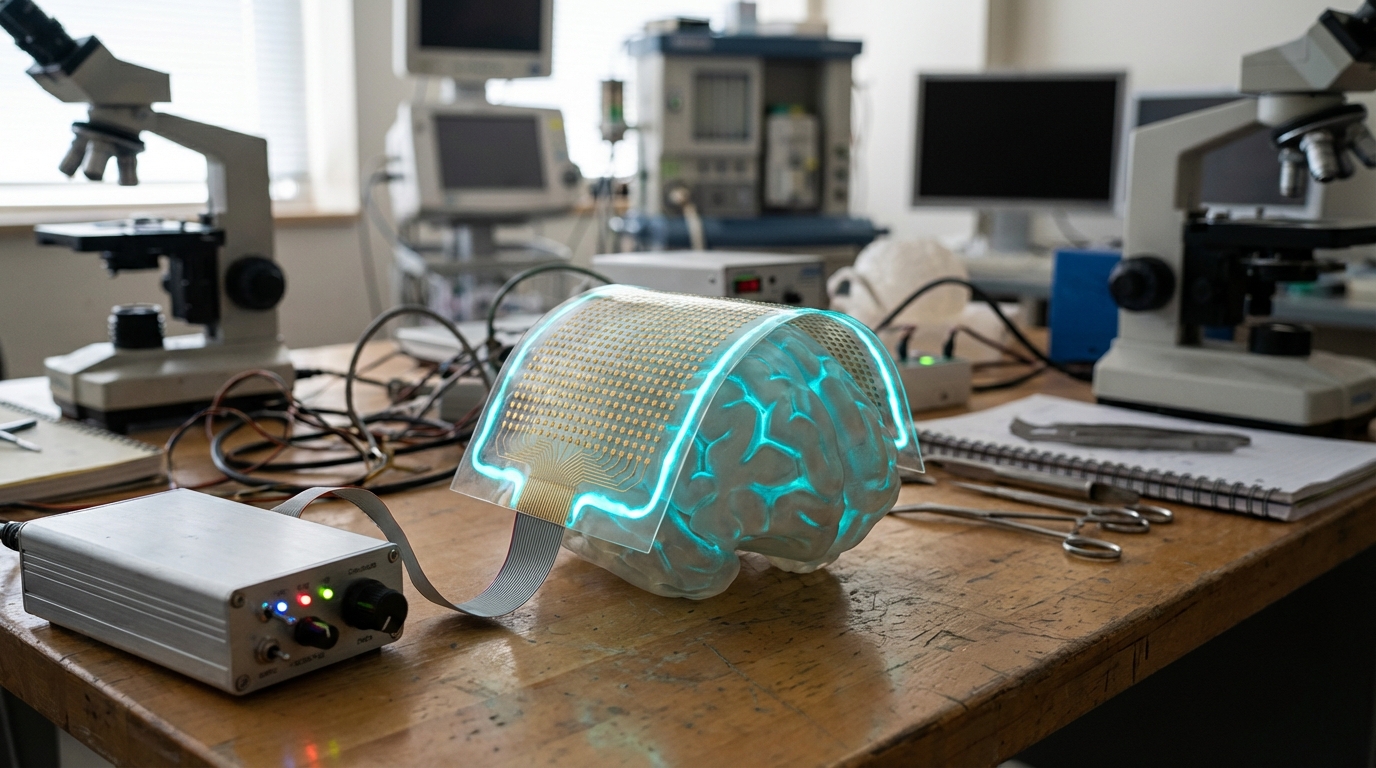

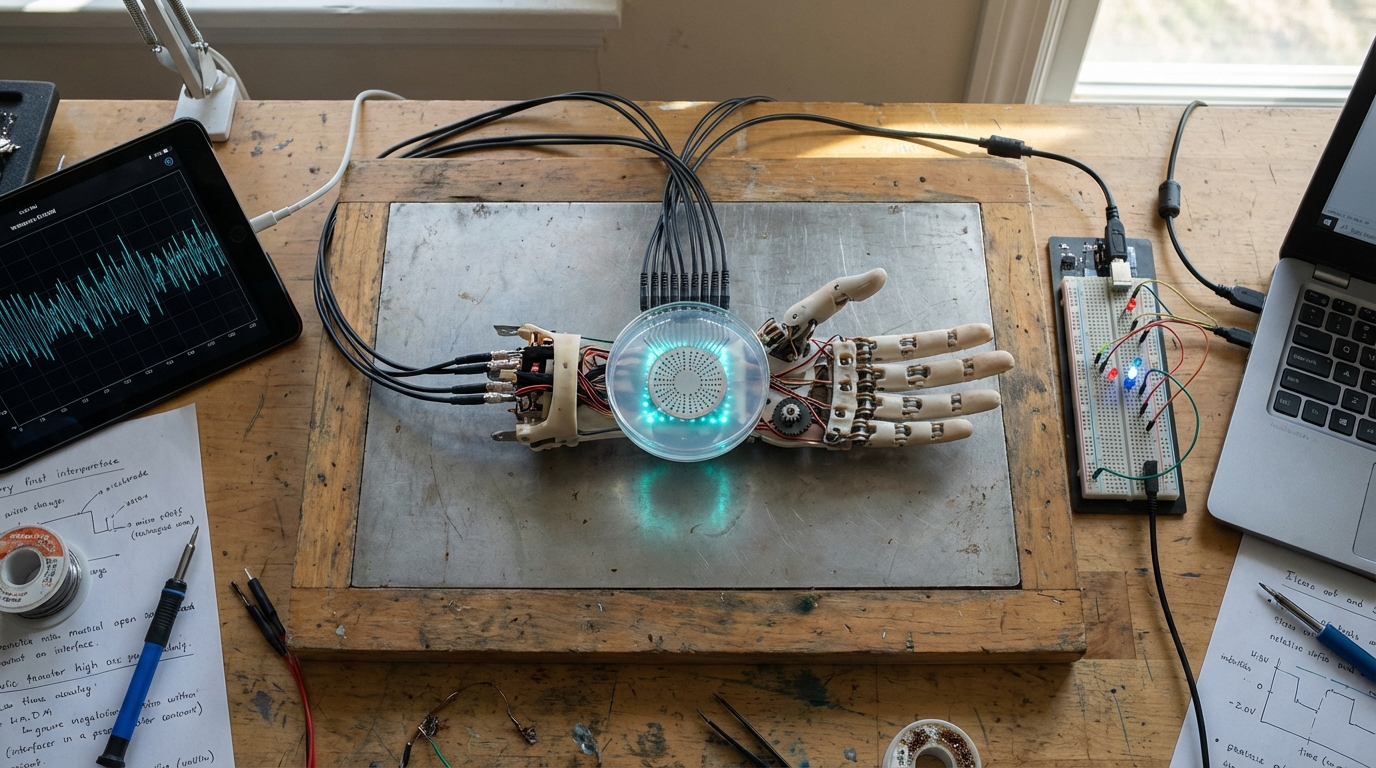

Sensory encoding algorithms are computational systems that convert digital data from cameras, sensors, or other sources into biomimetic neural stimulation patterns, such as patterned microstimulation that mimics natural neural activity. The brain can interpret these patterns as visual, tactile, or other sensations, which provides artificial sensory input for people with sensory deficits. For blindness, the algorithms translate camera images into patterns of electrical stimulation for the visual cortex. For restoring touch, they translate tactile sensor readings into stimulation patterns for somatosensory areas. In both cases the goal is to create artificial qualia (subjective sensory experiences) by stimulating the brain in ways that mimic natural sensory processing.
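The camera-to-stimulation step described above can be illustrated with a deliberately simplified sketch. It assumes a grayscale frame with values between 0 and 1 and a rectangular electrode grid, averages the pixels under each electrode, and maps that brightness linearly to a stimulation amplitude. The function name `encode_frame`, the `threshold` parameter, and the `max_amplitude_ua` ceiling are hypothetical and chosen only for illustration. This is a plain intensity-to-amplitude mapping, not a biomimetic encoder: it ignores timing, pulse shape, and how neurons actually respond.

```python
from typing import List

Frame = List[List[float]]  # grayscale image, each value in the range 0.0 to 1.0


def encode_frame(
    frame: Frame,
    grid_rows: int,
    grid_cols: int,
    threshold: float = 0.1,          # assumed: brightness below this gives no stimulation
    max_amplitude_ua: float = 60.0,  # assumed illustrative ceiling, not a clinical value
) -> List[List[float]]:
    """Map a grayscale frame to one amplitude (in microamps) per electrode."""
    height, width = len(frame), len(frame[0])
    if height % grid_rows or width % grid_cols:
        raise ValueError("frame size must be a multiple of the electrode grid size")

    block_h, block_w = height // grid_rows, width // grid_cols
    amplitudes: List[List[float]] = []
    for r in range(grid_rows):
        row: List[float] = []
        for c in range(grid_cols):
            # Collect the pixels that fall under this electrode.
            block = [
                frame[y][x]
                for y in range(r * block_h, (r + 1) * block_h)
                for x in range(c * block_w, (c + 1) * block_w)
            ]
            mean = sum(block) / len(block)
            # Dim regions produce no stimulation; brighter regions scale linearly.
            amplitude = 0.0 if mean < threshold else mean * max_amplitude_ua
            # Clamp so out-of-range input can never exceed the ceiling.
            row.append(min(amplitude, max_amplitude_ua))
        amplitudes.append(row)
    return amplitudes


if __name__ == "__main__":
    # 4x4 frame with a bright top-left quadrant, mapped onto a 2x2 electrode grid.
    demo = [
        [1.0, 1.0, 0.0, 0.0],
        [1.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
    ]
    print(encode_frame(demo, 2, 2))
```

The clamp is the one design choice worth noting: whatever the mapping from image to stimulation, an encoder of this kind would need a hard upper bound on the output so that unexpected input cannot drive stimulation beyond a configured limit.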

These algorithms address a central problem in restoring sensory function: feeding raw data to the brain does not by itself create meaningful sensations. Encoding the information in biomimetic patterns is intended to let the brain interpret artificial input as natural sensation. The technology is currently being developed at research institutions.

The technology is particularly significant for sensory prosthetics, where restoring vision or touch could dramatically improve quality of life, and improvements in encoding could make the restored sensations feel more natural. Several challenges remain: working out how to encode information effectively, ensuring that the brain can interpret the resulting patterns, and achieving sensations that feel natural. All of these depend on a better understanding of how the brain itself processes and encodes sensory information, which will require extensive further research.