Wearable biometric emotion recorders

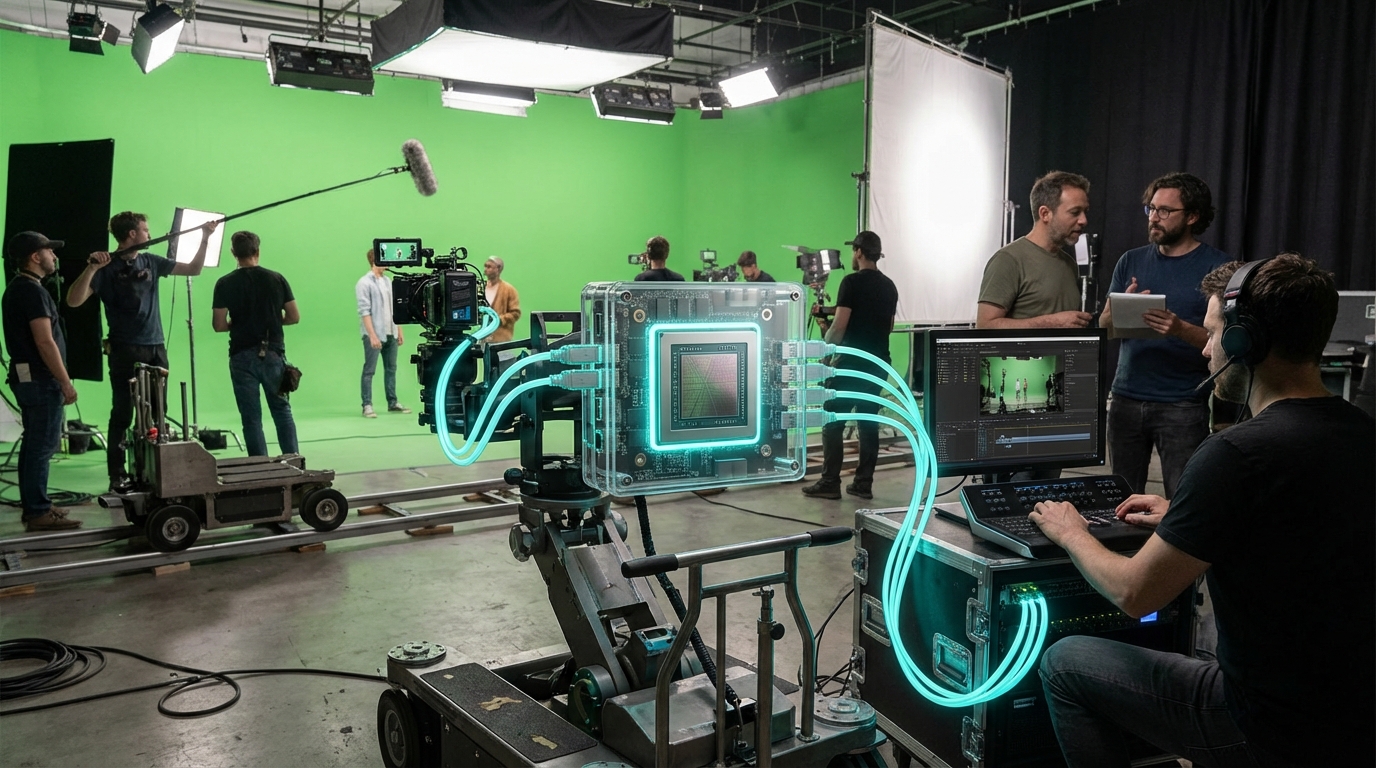

Wearable biometric emotion recorders combine photoplethysmography (PPG), electrodermal activity (EDA), electromyography (EMG), and occasionally lightweight EEG sensors inside garments, earbuds, or jewelry to stream second-by-second affect data. Signal-processing pipelines use multimodal fusion to translate small changes in heart-rate variability, skin conductance, and facial muscle tension into arousal and valence estimates, then compress that stream into metadata that can be injected into engines like Unreal or Unity. The stack often runs inference on the device to protect privacy and cut latency, and sends only encrypted uplinks for aggregate analysis.
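To make the fusion step concrete, here is a minimal Python sketch of one way such a pipeline could turn a short window of sensor readings into a compact arousal/valence record. It is an illustration under stated assumptions, not any vendor's method: the `SensorWindow` fields, the baseline and reference constants, and the 0.6/0.4 weights are hypothetical placeholders, and a real system would calibrate them per wearer.

```python
import json
import math
from dataclasses import dataclass
from typing import List


@dataclass
class SensorWindow:
    """One short window of readings. All field names are hypothetical."""
    ibi_ms: List[float]        # inter-beat intervals derived from PPG, in ms
    eda_microsiemens: float    # mean skin conductance over the window
    emg_smile: float           # cheek-muscle activity, pre-normalized to 0..1
    emg_frown: float           # brow-muscle activity, pre-normalized to 0..1


def rmssd(ibi_ms):
    """Root mean square of successive differences, a common short-term HRV measure."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    if not diffs:
        return 0.0
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def clamp(x, lo, hi):
    return max(lo, min(hi, x))


def fuse(window, eda_baseline=2.0, eda_range=10.0, hrv_ref_ms=50.0):
    """Simple late fusion. The constants and weights are illustrative assumptions."""
    # Higher skin conductance above baseline is read as higher arousal.
    eda_score = clamp((window.eda_microsiemens - eda_baseline) / eda_range, 0.0, 1.0)
    # Lower HRV is read as higher arousal, so the scaled RMSSD is inverted.
    hrv_score = 1.0 - clamp(rmssd(window.ibi_ms) / hrv_ref_ms, 0.0, 1.0)
    arousal = 0.6 * eda_score + 0.4 * hrv_score
    # Valence is approximated as smile activity minus frown activity.
    valence = clamp(window.emg_smile - window.emg_frown, -1.0, 1.0)
    return arousal, valence


def to_metadata(timestamp_s, arousal, valence):
    """Compress one estimate into a short JSON record for a game-engine plugin."""
    record = {"t": timestamp_s, "a": round(arousal, 2), "v": round(valence, 2)}
    return json.dumps(record, separators=(",", ":"))


if __name__ == "__main__":
    window = SensorWindow(
        ibi_ms=[800, 810, 790, 800],
        eda_microsiemens=7.0,
        emg_smile=0.7,
        emg_frown=0.2,
    )
    arousal, valence = fuse(window)
    print(to_metadata(12, arousal, valence))
```

Only the small JSON record would need to leave the device in this sketch; the raw waveforms stay local, which matches the on-device inference pattern described above.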

Studios, streamers, and brand labs experiment with these devices to understand how audiences react to story beats, music drops, or interactive choices. Live-service games already tap emotion telemetry to adapt encounter difficulty, while Korean drama platforms test biometric watch parties that synchronize lighting and haptics with viewers’ collective mood. Marketers eye the tech for attention insights that go beyond panel surveys, prompting new debates about consent and the commercialization of affective data.
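As an illustration of the difficulty-adaptation use case mentioned above, the following Python sketch smooths incoming arousal estimates and nudges a difficulty value toward a target arousal level. The function names, the target of 0.6, and the smoothing and gain constants are all assumptions made for this example; shipped games may use entirely different control logic.

```python
def smooth(previous, sample, alpha=0.2):
    """Exponential moving average so a single spike does not swing the game."""
    return (1 - alpha) * previous + alpha * sample


def adjust_difficulty(difficulty, smoothed_arousal, target=0.6, gain=0.5):
    """Nudge difficulty (0..1) up when players are under-aroused, down when over."""
    error = target - smoothed_arousal
    return max(0.0, min(1.0, difficulty + gain * error))


if __name__ == "__main__":
    difficulty = 0.5
    arousal_avg = 0.6  # start at the target so the first step is neutral
    # Hypothetical stream of per-second arousal estimates in the range 0..1.
    for sample in [0.9, 0.95, 0.9, 0.85]:
        arousal_avg = smooth(arousal_avg, sample)
        difficulty = adjust_difficulty(difficulty, arousal_avg)
        print(f"arousal_avg={arousal_avg:.2f} difficulty={difficulty:.2f}")
```

Smoothing before adjusting is a deliberate choice in this sketch: biometric estimates are noisy, and reacting to every sample would make the encounter feel erratic.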

The technology sits at TRL 5, meaning it has been validated in relevant environments but not yet proven at scale, and wider deployment hinges on interoperable emotion ontologies and policy guardrails. The IEEE P7014 working group and the EU AI Act both call for explicit opt-in and explainability around biometric inference, pushing vendors to build privacy dashboards and “emotion firewalls.” As standards solidify, biometric emotion channels are poised to become an opt-in layer for immersive media analytics, powering adaptive storytelling while forcing studios to treat affect data like other sensitive personal information.