Automated Essay Scoring

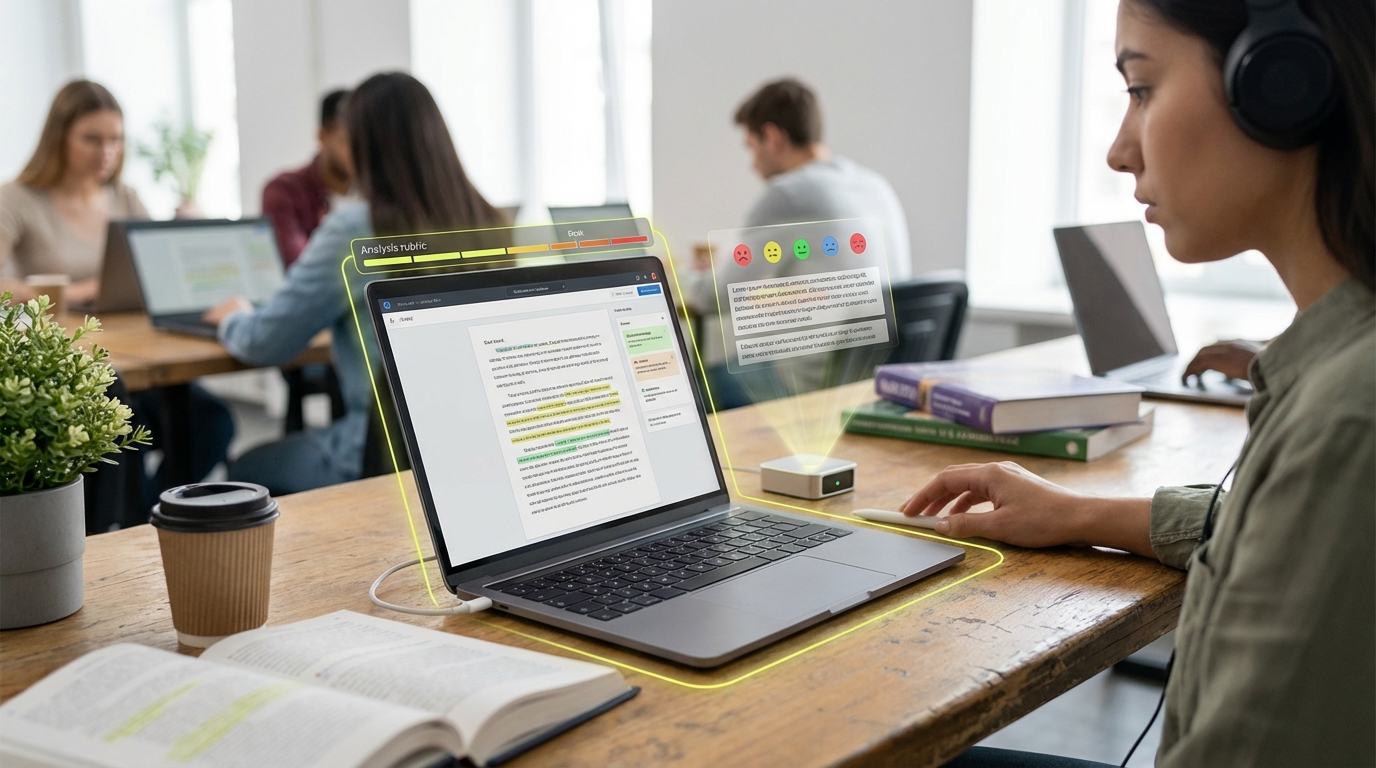

Automated essay scoring systems use natural language processing (NLP) and machine learning to assess written work. They analyze grammar, syntax, coherence, argument structure, use of evidence, and other indicators of writing quality, then produce scores and feedback aligned with a specific rubric. These systems can evaluate essays, short answers, and other written responses and return results instantly. When well validated, their scores agree closely with those of trained human raters, which frees instructors to concentrate on higher-order feedback, coaching, and instruction. More advanced systems offer explainable scoring that shows which aspects of the writing contributed to a score, helping learners understand their strengths and where they need to improve.
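To make explainable, feature-based scoring concrete, here is a minimal sketch of a linear scoring model whose per-feature contributions double as the explanation. The four surface features, their weights, the intercept, the transition-word list, and the 1 to 6 score range are all hypothetical; a production system would learn its weights from human-scored essays and rely on much richer NLP features covering syntax, discourse, and argument structure.

```python
import re

# Hypothetical feature weights and intercept, for illustration only; a real
# system would learn these from a corpus of human-scored essays.
WEIGHTS = {
    "word_count": 0.004,
    "avg_sentence_length": 0.05,
    "unique_word_ratio": 2.0,
    "transition_words": 0.15,
}
INTERCEPT = 1.0
# A tiny, hypothetical list of discourse markers used as a crude coherence signal.
TRANSITIONS = {"first", "however", "therefore", "furthermore", "moreover", "consequently", "finally"}


def extract_features(text: str) -> dict[str, float]:
    """Compute a few simple surface features from an essay."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    return {
        "word_count": float(n_words),
        "avg_sentence_length": n_words / len(sentences) if sentences else 0.0,
        "unique_word_ratio": len(set(words)) / n_words if n_words else 0.0,
        "transition_words": float(sum(w in TRANSITIONS for w in words)),
    }


def score_essay(text: str, min_score: int = 1, max_score: int = 6) -> tuple[int, dict[str, float]]:
    """Return a clamped integer score plus each feature's contribution to it."""
    features = extract_features(text)
    # Each contribution is weight * feature value; together with the intercept
    # they sum to the raw score, so they serve directly as the explanation.
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    raw = INTERCEPT + sum(contributions.values())
    score = max(min_score, min(max_score, round(raw)))
    return score, contributions
```

Sorting the returned `contributions` by value gives a ranked list of the features that raised or lowered the score, the kind of breakdown a feedback interface could show a learner. A linear model is used here only because its contributions are exactly additive; non-linear models need separate attribution methods to produce comparable explanations.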

This innovation addresses the time-intensive nature of grading written work: giving detailed feedback on student writing takes substantial instructor time, which often delays the feedback students receive. By automating scoring and basic feedback, these systems shorten turnaround times and let instructors focus on more sophisticated feedback and instruction. ETS, along with a range of educational technology platforms and assessment providers, has developed such systems, and some are already widely used in standardized testing and educational settings.

The technology is particularly significant for scaling writing assessment, because automated scoring can deliver consistent, immediate feedback at a volume that manual grading cannot easily match. As NLP capabilities improve, automated scoring is likely to become more sophisticated and more widely adopted. Several challenges remain, however: ensuring scoring accuracy, detecting and mitigating algorithmic bias, keeping scores aligned with human judgment, and making sure automated feedback actually improves student writing. Automated scoring is an important tool for scaling writing assessment, but it requires careful validation and implementation to be effective and fair.
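Validation of this kind is usually quantified. The following is a minimal sketch, assuming integer scores on a fixed scale, of two common checks: quadratic weighted kappa (QWK), a widely reported measure of agreement between machine and human scores in essay-scoring work, and a standardized mean difference (SMD) between machine and human scores computed per subgroup as one simple bias check. The function names, the pooled-standard-deviation convention, and the subgroup labels are assumptions for illustration; real evaluation programs define their own metrics and acceptance thresholds.

```python
import math
from statistics import mean, pstdev


def quadratic_weighted_kappa(human: list[int], machine: list[int], min_score: int, max_score: int) -> float:
    """Agreement between two raters on an ordinal scale; 1.0 is perfect agreement."""
    if len(human) != len(machine) or not human:
        raise ValueError("score lists must be non-empty and of equal length")
    if max_score <= min_score:
        raise ValueError("max_score must exceed min_score")
    if not all(min_score <= s <= max_score for s in human + machine):
        raise ValueError("all scores must lie within [min_score, max_score]")
    k = max_score - min_score + 1
    # Observed confusion matrix: rows are human scores, columns are machine scores.
    observed = [[0] * k for _ in range(k)]
    for h, m in zip(human, machine):
        observed[h - min_score][m - min_score] += 1
    hist_h = [sum(row) for row in observed]
    hist_m = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    n = len(human)
    num = den = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # larger disagreements cost quadratically more
            expected = hist_h[i] * hist_m[j] / n  # agreement expected by chance alone
            num += weight * observed[i][j]
            den += weight * expected
    # Undefined when both raters give every essay the same single score.
    return 1.0 - num / den if den else math.nan


def standardized_mean_difference(human: list[float], machine: list[float]) -> float:
    """(machine mean - human mean) divided by the pooled standard deviation."""
    pooled_sd = math.sqrt((pstdev(human) ** 2 + pstdev(machine) ** 2) / 2)
    if pooled_sd == 0:
        return math.nan  # undefined when both score sets are constant
    return (mean(machine) - mean(human)) / pooled_sd


def smd_by_subgroup(human: list[float], machine: list[float], groups: list[str]) -> dict[str, float]:
    """Compute the standardized mean difference separately for each subgroup label."""
    split: dict[str, tuple[list[float], list[float]]] = {}
    for h, m, g in zip(human, machine, groups):
        hs, ms = split.setdefault(g, ([], []))
        hs.append(h)
        ms.append(m)
    return {g: standardized_mean_difference(hs, ms) for g, (hs, ms) in split.items()}
```

A large positive or negative SMD for one subgroup, relative to the others, would suggest that the engine systematically over- or under-scores that group compared with human raters and would warrant closer review. QWK alone can hide such differences, since it summarizes agreement over the whole sample.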