World-Model Planning Engines

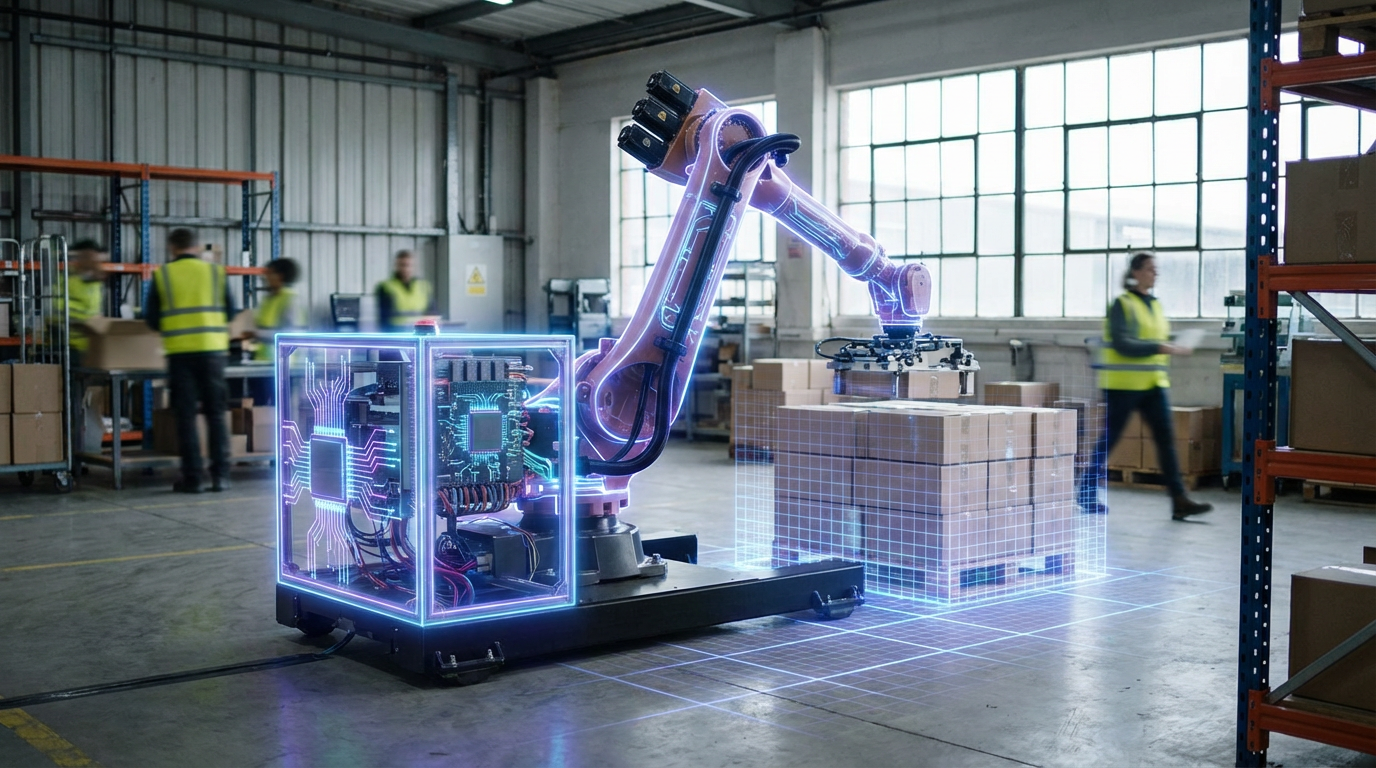

World-model planning engines learn a compressed, latent representation of how an environment works, predicting how its state changes in response to actions, and pair that model with a planning algorithm to reason over long-horizon action sequences. Because the dynamics are learned separately from decision-making, these systems can simulate possible futures, compare candidate strategies, and plan multi-step behavior without extensive trial and error in the real world.
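The learn-then-plan loop can be sketched in a few lines. The toy below is an illustration under stated assumptions, not a real system: the environment is one-dimensional, the "world model" is a single learned gain `k` rather than a latent neural network, and the planner is simple random shooting. All names (`true_step`, `fit_model`, `plan`, `run_episode`) are hypothetical.

```python
import random

def true_step(x, a):
    """Real environment, hidden from the agent: position moves by 0.5 * action."""
    return x + 0.5 * a

def fit_model(transitions):
    """Least-squares fit of the gain k in the assumed model x' = x + k * a."""
    num = sum(a * (x_next - x) for x, a, x_next in transitions)
    den = sum(a * a for _, a, _ in transitions)
    return num / den

def plan(x0, goal, k, rng, horizon=3, n_candidates=500):
    """Random-shooting planner: sample action sequences, roll each one out
    inside the learned model, and return the first action of the cheapest."""
    best_cost, best_first = float("inf"), 0.0
    for _ in range(n_candidates):
        seq = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        x, cost = x0, 0.0
        for a in seq:
            x = x + k * a              # imagined step, no real interaction
            cost += (x - goal) ** 2    # penalize distance to the goal
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first

def run_episode(goal=2.0, steps=30, seed=0):
    rng = random.Random(seed)
    # 1) Collect a small batch of real transitions with random actions.
    transitions, x = [], 0.0
    for _ in range(30):
        a = rng.uniform(-1.0, 1.0)
        x_next = true_step(x, a)
        transitions.append((x, a, x_next))
        x = x_next
    # 2) Fit the world model from that batch.
    k = fit_model(transitions)
    # 3) Act by replanning at every step (model-predictive control).
    x = 0.0
    for _ in range(steps):
        x = true_step(x, plan(x, goal, k, rng))
    return k, x

if __name__ == "__main__":
    k, final_x = run_episode()
    print(f"learned gain: {k:.3f}, final position: {final_x:.3f}")
```

Only 30 real transitions are used to fit the model; every candidate future evaluated by `plan` is simulated. Practical systems replace the scalar gain with a learned latent dynamics network and the random-shooting search with stronger planners, but the division of labor is the same.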

This approach addresses the sample-efficiency problem in reinforcement learning, where model-free agents often need millions of environment interactions to learn an effective policy. An agent with a predictive model of the world can instead "imagine" the consequences of its actions and plan ahead, which can substantially reduce the amount of real-world experience it needs. Research on world models has reported more efficient learning and better generalization, with applications in robotics, game playing, and autonomous systems.

The technology is particularly valuable where real-world experience is expensive, dangerous, or slow to obtain, as in robotics, autonomous vehicles, and complex decision-making tasks. As AI systems move into real-world applications that demand sophisticated planning and reasoning, world models supply the predictive capability needed for long-horizon decision-making. Learning an accurate world model remains challenging, however, especially for complex, high-dimensional environments, and model errors can compound over the planning horizon and lead to poor decisions.
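To illustrate why model errors matter for planning, the sketch below rolls out a slightly wrong model alongside the true dynamics and measures how far the imagined trajectory drifts. The scalar linear system and the specific gains (1.00 for the environment, 1.05 for the model) are assumptions chosen purely for illustration, and the function names are hypothetical.

```python
def rollout(x0, gain, actions):
    """Roll the scalar system x' = gain * x + a forward; return visited states."""
    states, x = [], x0
    for a in actions:
        x = gain * x + a
        states.append(x)
    return states

def prediction_errors(x0=1.0, true_gain=1.00, model_gain=1.05, horizon=20):
    """Absolute gap between real and imagined states at each step of the horizon."""
    actions = [0.1] * horizon
    real = rollout(x0, true_gain, actions)        # what the environment does
    imagined = rollout(x0, model_gain, actions)   # what the model predicts
    return [abs(r - i) for r, i in zip(real, imagined)]

if __name__ == "__main__":
    errors = prediction_errors()
    for step in (1, 5, 10, 20):
        print(f"step {step:2d}: prediction error {errors[step - 1]:.3f}")
```

A 5% error in the gain is small for a single step, but because each imagined state feeds the next prediction, the gap widens at every step. This is one reason planners built on learned models often use short horizons and replan frequently.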