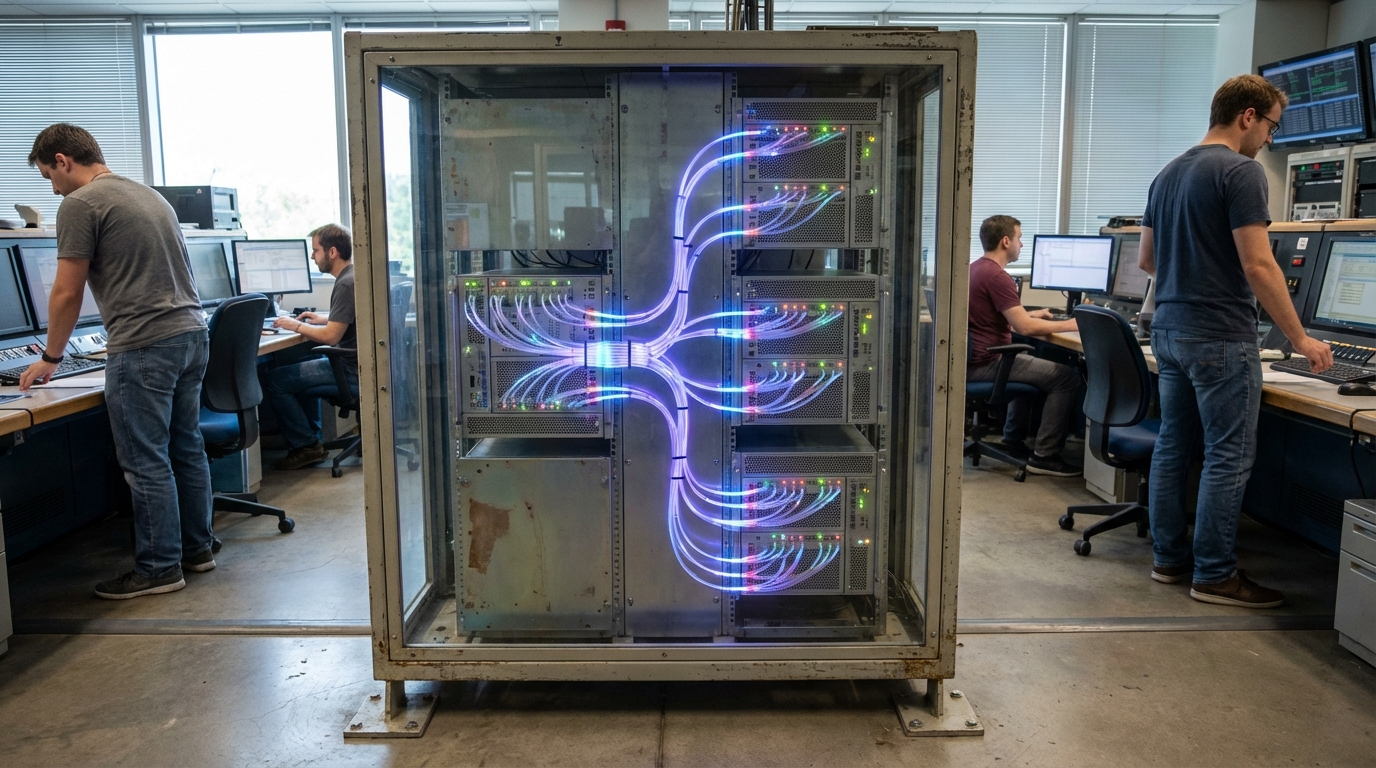

Mixture-of-Experts Model Platforms

Mixture-of-experts (MoE) model platforms serve large language models whose feed-forward layers are split into multiple specialized expert subnetworks (typically from a handful to a few hundred per layer), with a routing mechanism that dynamically selects which experts process each input token. Because of this sparse activation, only a fraction of the model's parameters are active for any given token, which substantially reduces compute per token while preserving the model's overall capacity.
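The routing step described above can be illustrated with a minimal sketch of top-k gating in plain Python. It is not taken from any particular platform: the names `route_token` and `moe_layer`, the choice of `top_k=2`, and the toy experts (simple scaling functions) are all assumptions made for illustration. Production routers typically operate on batched tensors and add load-balancing terms that are omitted here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=2):
    """Pick the top_k experts for one token.

    Returns a list of (expert_index, weight) pairs, with the weights
    renormalized so they sum to 1 over the selected experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, router_weights, experts, top_k=2):
    """Run one token through a toy MoE layer.

    router_weights holds one weight vector per expert (hypothetical layout);
    the router logit for an expert is the dot product with the token.
    Only the selected experts are evaluated, which is the source of the
    compute savings.
    """
    logits = [sum(t * w for t, w in zip(token, wv)) for wv in router_weights]
    out = [0.0] * len(token)
    for idx, weight in route_token(logits, top_k):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out


# Toy setup: four "experts" that just scale their input by a constant.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
token = [2.0, 1.0]

selected = route_token([2.0, 1.0, -2.0, -1.0], top_k=2)
output = moe_layer(token, router_weights, experts, top_k=2)
```

In this toy run, only two of the four experts are evaluated for the token; the other two contribute no compute at all.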

This approach addresses the cost and scalability challenges of deploying large language models, where activating the full model for every token is prohibitively expensive for many applications. By activating only the relevant experts for each token, MoE systems can match the quality of much larger dense models while spending far less compute per token, enabling more cost-effective deployment. Companies like Google (with models like GLaM and Gemini 1.5), Mistral AI (with Mixtral), and various cloud providers are deploying MoE architectures, making large-scale AI more accessible.
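The cost argument comes down to simple arithmetic on total versus active parameters. The sketch below uses a hypothetical helper, `moe_param_counts`, and entirely made-up sizes; they do not describe any real model. It also ignores the router's own parameters, which are small by comparison.

```python
def moe_param_counts(shared_params, params_per_expert, num_experts, top_k, num_moe_layers):
    """Return (total, active) parameter counts for a simplified MoE model.

    shared_params: parameters used by every token (attention, embeddings, ...)
    params_per_expert: size of one expert in one MoE layer
    All argument names and the model layout are illustrative assumptions.
    """
    total = shared_params + num_moe_layers * num_experts * params_per_expert
    active = shared_params + num_moe_layers * top_k * params_per_expert
    return total, active


# Hypothetical configuration: 8 experts per layer, 2 selected per token.
total, active = moe_param_counts(
    shared_params=2_000_000_000,
    params_per_expert=150_000_000,
    num_experts=8,
    top_k=2,
    num_moe_layers=32,
)
active_fraction = active / total
```

With these assumed numbers, each token touches 11.6 billion of 40.4 billion parameters, or roughly 29 percent. The saving applies to compute per token; all experts still have to be held in memory, so the memory footprint follows the total count rather than the active one.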

The technology is particularly significant for enterprise AI applications where cost efficiency is critical, such as AI copilots, search systems, and research workloads. As AI models continue to grow in size, MoE architectures offer a way to scale capacity without a proportional increase in compute per token. MoE is becoming a common choice for large-scale language model deployment, enabling business models and applications that were previously economically unviable.